
E. Schwartz, R. Giryes, A. M. Bronstein,

"DeepISP: Towards learning an end-to-end image processing pipeline",

IEEE Trans. Image Processing, 2018. Abstract: We present DeepISP, a full end-to-end deep neural model of the camera image signal processing (ISP) pipeline. Our model learns a mapping from the raw low-light mosaiced image to the final visually compelling image and encompasses low-level tasks such as demosaicing and denoising as well as higher-level tasks such as color correction and image adjustment. The training and evaluation of the pipeline were performed on a dedicated dataset containing pairs of low-light and well-lit images captured by a Samsung S7 smartphone camera in both raw and processed JPEG formats. The proposed solution achieves state-of-the-art performance in objective evaluation of PSNR on the subtask of joint denoising and demosaicing. For the full end-to-end pipeline, it achieves better visual quality compared to the manufacturer ISP, in both a subjective human assessment and when rated by a deep model trained for assessing image quality.
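
The PSNR score mentioned above follows the standard definition PSNR = 10·log10(MAX²/MSE). A minimal NumPy sketch, assuming 8-bit images (MAX = 255) and a hypothetical helper name `psnr` (not taken from the DeepISP code), is:

```python
import numpy as np

def psnr(reference: np.ndarray, estimate: np.ndarray, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    if reference.shape != estimate.shape:
        raise ValueError("images must have the same shape")
    # Mean squared error over all pixels (and channels, if any), in float64.
    diff = reference.astype(np.float64) - estimate.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))

clean = np.zeros((4, 4), dtype=np.uint8)
noisy = clean + 1  # every pixel off by one gray level, so MSE = 1
print(round(psnr(clean, noisy), 2))  # 48.13
```

Because PSNR depends only on the mean squared error, it suits the objective evaluation of the denoising and demosaicing subtask, while perceived quality of the full pipeline is assessed separately.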


T. Remez, O. Litany, R. Giryes, A. M. Bronstein,

"Class-aware fully-convolutional Gaussian and Poisson denoising",

IEEE Trans. Image Processing, 2018. Abstract: We propose a fully-convolutional neural-network architecture for image denoising which is simple yet powerful. Its structure allows it to exploit the gradual nature of the denoising process, in which shallow layers handle local noise statistics, while deeper layers recover edges and enhance textures. Our method advances the state of the art when trained for different noise levels and distributions (both Gaussian and Poisson). In addition, we show that making the denoiser class-aware by exploiting semantic class information boosts performance, enhances textures, and reduces artifacts.


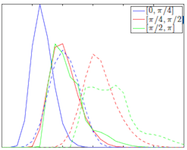

H. Haim, S. Elmalem, R. Giryes, A. M. Bronstein, E. Marom,

"Depth estimation from a single image using deep learned phase coded mask",

IEEE Trans. Computational Imaging, Vol. 2(3), 2018. Abstract: Depth estimation from a single image is a well-known challenge in computer vision. With the advent of deep learning, several approaches for monocular depth estimation have been proposed, all of which have inherent limitations due to the scarce depth cues that exist in a single image. Moreover, these methods are computationally very demanding, which makes them inadequate for systems with limited processing power. In this paper, a phase-coded aperture camera for depth estimation is proposed. The camera is equipped with an optical phase mask that provides unambiguous depth-related color characteristics for the captured image. These are used for estimating the scene depth map using a fully convolutional neural network. The phase-coded aperture structure is learned jointly with the network weights using backpropagation. The strong depth cues (encoded in the image by the phase mask, designed together with the network weights) allow a much simpler neural network architecture for faster and more accurate depth estimation. Performance achieved on simulated images as well as on a real optical setup is superior to the state-of-the-art monocular depth estimation methods (both with respect to the depth accuracy and required processing power), and is competitive with more complex and expensive depth estimation methods such as light-field cameras.


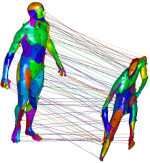

O. Litany, E. Rodolà, A. M. Bronstein, M. M. Bronstein,

"Fully spectral partial shape matching",

Computer Graphics Forum, Vol. 36(2), 2017. Abstract: We propose an efficient procedure for calculating partial dense intrinsic correspondence between deformable shapes performed entirely in the spectral domain. Our technique relies on the recently introduced partial functional maps formalism and on the joint approximate diagonalization (JAD) of the Laplace-Beltrami operators previously introduced for matching non-isometric shapes. We show that a variant of the JAD problem with an appropriately modified coupling term (surprisingly) allows constructing quasi-harmonic bases localized on the latent corresponding parts. This circumvents the need to explicitly compute the unknown parts by means of the cumbersome alternating minimization used in previous approaches, and allows performing all the calculations in the spectral domain with constant complexity independent of the number of shape vertices. We provide an extensive evaluation of the proposed technique on standard non-rigid correspondence benchmarks and show state-of-the-art performance in various settings, including partiality and the presence of topological noise.


Or Litany, Tal Remez, Daniel Freedman, Lior Shapira, Alex Bronstein, Ran Gal,

"ASIST: Automatic Semantically Invariant Scene Transformation",

Computer Vision and Image Understanding, Vol. 157, pp. 284-299, 2017. Abstract: We present ASIST, a technique for transforming point clouds by replacing objects with their semantically equivalent counterparts. Transformations of this kind have applications in virtual reality, repair of fused scans, and robotics. ASIST is based on a unified formulation of semantic labeling and object replacement; both result from minimizing a single objective. We present numerical tools for the efficient solution of this optimization problem. The method is experimentally assessed on new datasets of both synthetic and real point clouds, and is additionally compared to two recent works on object replacement on data from the corresponding papers.


R. Giryes, G. Sapiro, A. M. Bronstein,

"Deep neural networks with random Gaussian weights: A universal classification strategy?", IEEE Trans. Signal Processing, Vol. 64(13), pp. 3444-3457, 2016. Abstract: Three important properties of a classification machinery are: (i) the system preserves the important information of the input data; (ii) the training examples convey information about unseen data; and (iii) the system is able to treat points from different classes differently. In this work we show that these fundamental properties are inherited by the architecture of deep neural networks. We formally prove that these networks with random Gaussian weights perform a distance-preserving embedding of the data, with a special treatment for in-class and out-of-class data. Similar points at the input of the network are likely to have a similar output. The theoretical analysis of deep networks presented here exploits tools used in the compressed sensing and dictionary learning literature, thereby making a formal connection between these important topics. The derived results allow drawing conclusions on the metric learning properties of the network and their relation to its structure, and provide bounds on the required size of the training set such that the training examples would faithfully represent the unseen data. The results are validated with state-of-the-art trained networks.

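The distance-preserving behavior described above can be probed numerically. The following is an illustrative experiment only, not the paper's proof or setup: it assumes a single fully connected ReLU layer with i.i.d. N(0, 2/m) weights, where the variance 2/m is our choice so that E‖ReLU(Wx)‖² = ‖x‖², and compares a norm and a small pairwise distance before and after the layer.

```python
import numpy as np

rng = np.random.default_rng(0)
d, m = 64, 8192  # input dimension and (wide) layer width

# Random Gaussian weights; variance 2/m keeps E||ReLU(Wx)||^2 equal to ||x||^2.
W = rng.normal(0.0, np.sqrt(2.0 / m), size=(m, d))

def layer(x):
    """One random fully connected layer followed by ReLU."""
    return np.maximum(W @ x, 0.0)

x = rng.normal(size=d)
y = x + 0.1 * rng.normal(size=d)  # a nearby point (small angle to x)

norm_ratio = np.linalg.norm(layer(x)) / np.linalg.norm(x)
dist_ratio = np.linalg.norm(layer(x) - layer(y)) / np.linalg.norm(x - y)
print(f"norm ratio {norm_ratio:.3f}, distance ratio {dist_ratio:.3f}")
```

With a wide layer, both ratios come out close to 1; repeating the experiment for pairs at larger angles is one way to explore the different treatment of in-class and out-of-class points that the abstract refers to.
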

O. Litany, E. Rodolà, A. M. Bronstein, M. M. Bronstein, D. Cremers,

"Non-rigid puzzles",

Computer Graphics Forum, Vol. 35(5), 2016. SGP Best Paper Award. Abstract: Shape correspondence is a fundamental problem in computer graphics and vision, with applications in various problems including animation, texture mapping, robotic vision, medical imaging, archaeology, and many more. In settings where the shapes are allowed to undergo non-rigid deformations and only partial views are available, the problem becomes very challenging. To address this setting, we present a non-rigid multi-part shape matching algorithm. We assume we are given a reference shape and multiple parts of it undergoing a non-rigid deformation. Each of these query parts can be additionally contaminated by clutter, may overlap with other parts, and there might be missing parts or redundant ones. Our method simultaneously solves for the segmentation of the reference model and for a dense correspondence to (subsets of) the parts. Experimental results on synthetic as well as real scans demonstrate the effectiveness of our method in dealing with this challenging matching scenario.


Xiao Bian, Hamid Krim, Alex Bronstein, and Liyi Dai,

"Sparsity and Nullity: Paradigms for Analysis Dictionary Learning",

SIAM J. Imaging Sci., Vol. 9, No. 3, pp. 1107–1126, 2016. Abstract: Sparse models in dictionary learning have been successfully applied in a wide variety of machine learning and computer vision problems, and as a result have recently attracted increased research interest. Another interesting related problem based on linear equality constraints, namely the sparse null space (SNS) problem, first appeared in 1986 and has since inspired results on sparse basis pursuit. In this paper, we investigate the relation between the SNS problem and the analysis dictionary learning (ADL) problem, and show that the SNS problem plays a central role and may be utilized to solve dictionary learning problems. Moreover, we propose an efficient sparse null space basis pursuit (SNS-BP) algorithm and extend it to a solution of ADL. Experimental results on synthetic numerical data and real-world data are further presented to validate the performance of our method.


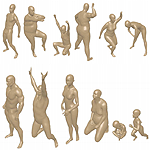

D. Pickup, X. Sun, P. L. Rosin, R. R. Martin, Z. Cheng, Z. Lian, M. Aono, A. Ben Hamza, A. M. Bronstein, M. M. Bronstein, S. Bu, U. Castellani, S. Cheng, V. Garro, A. Giachetti, A. Godil, J. Han, H. Johan, L. Lai, B. Li, C. Li, H. Li, R. Litman, X. Liu, Z. Liu, Y. Lu, A. Tatsuma, J. Ye,

"Shape Retrieval of Non-Rigid 3D Human Models",

Intl. Journal of Computer Vision (IJCV), 2016. Abstract: 3D models of humans are commonly used within computer graphics and vision, and so the ability to distinguish between body shapes is an important shape retrieval problem. We extend our recent paper which provided a benchmark for testing non-rigid 3D shape retrieval algorithms on 3D human models. This benchmark provided a far stricter challenge than previous shape benchmarks. We have added 145 new models for use as a separate training set, in order to standardise the training data used and provide a fairer comparison. We have also included experiments with the FAUST dataset of human scans. All participants of the previous benchmark study have taken part in the new tests reported here, many providing updated results using the new data. In addition, further participants have also taken part, and we provide extra analysis of the retrieval results. A total of 25 different shape retrieval methods are compared.


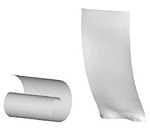

H. Haim, A. M. Bronstein, E. Marom,

"Computational all-in-focus imaging using an optical phase mask", OSA Optics Express, Vol. 23, No. 19, 2015. Abstract: A method for extended depth of field imaging based on image acquisition through a thin binary phase plate followed by fast automatic computational post-processing is presented. By placing a wavelength-dependent optical mask inside the pupil of a conventional camera lens, one acquires a unique response for each of the three main color channels, which adds valuable information that allows blind reconstruction of blurred images without the need for an iterative search process to estimate the blurring kernel. The presented simulation, as well as the capture of a real-life scene, shows how acquiring a one-shot image focused at a single plane enables generating a deblurred scene over an extended range in space.


Y. Aflalo, A. M. Bronstein, R. Kimmel,

"On convex relaxation of graph isomorphism", Proc. National Academy of Sciences (PNAS), February 23, 2015. Abstract: We consider the problem of exact and inexact matching of weighted undirected graphs, in which a bijective correspondence is sought to minimize a quadratic weight disagreement. This computationally challenging problem is often relaxed as a convex quadratic program, in which the space of permutations is replaced by the space of doubly stochastic matrices. However, the applicability of such a relaxation is poorly understood. We define a broad class of friendly graphs characterized by an easily verifiable spectral property. We prove that for friendly graphs, the convex relaxation is guaranteed to find the exact isomorphism or certify its nonexistence. This result is further extended to approximately isomorphic graphs, for which we develop an explicit bound on the amount of weight disagreement under which the relaxation is guaranteed to find the globally optimal approximate isomorphism. We also show that in many cases, the graph matching problem can be further harmlessly relaxed to a convex quadratic program with only n separable linear equality constraints, which is substantially more efficient than the standard relaxation involving 2n equality and n^2 inequality constraints. Finally, we show that our results are still valid for unfriendly graphs if additional information in the form of seeds or attributes is allowed, with the latter satisfying an easy-to-verify spectral characteristic.

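One reading of the "easily verifiable spectral property", which we believe matches the paper's definition of friendly graphs but which should be checked against it, is that the adjacency matrix has a simple spectrum (no repeated eigenvalues) and none of its eigenvectors is orthogonal to the all-ones vector. A minimal NumPy sketch of that test, with a hypothetical helper name `is_friendly` and an arbitrary numerical tolerance, is:

```python
import numpy as np

def is_friendly(A: np.ndarray, tol: float = 1e-8) -> bool:
    """Assumed friendliness test for a symmetric weighted adjacency matrix A:
    all eigenvalues distinct and no eigenvector orthogonal to the all-ones vector."""
    if not np.allclose(A, A.T):
        raise ValueError("A must be symmetric (undirected graph)")
    eigvals, eigvecs = np.linalg.eigh(A)  # ascending eigenvalues, unit-norm eigenvector columns
    simple_spectrum = np.all(np.diff(eigvals) > tol)
    overlaps = np.abs(eigvecs.T @ np.ones(A.shape[0]))  # |<phi_k, 1>| for each eigenvector
    return bool(simple_spectrum and np.all(overlaps > tol))

# 3-vertex path: the eigenvector (1, 0, -1)/sqrt(2) is orthogonal to the ones vector.
path = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)
print(is_friendly(path))  # False

# A random weighted graph: generically friendly.
rng = np.random.default_rng(1)
W = np.triu(rng.random((6, 6)), 1)
W = W + W.T
print(is_friendly(W))
```

Graphs with symmetries, like the path above, fail the test; the abstract's last sentence addresses such unfriendly cases through seeds or attributes.
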

S. Korman, R. Litman, S. Avidan, A. M. Bronstein,

"Probably approximately symmetric: Fast rigid symmetry detection with global guarantees", Computer Graphics Forum (CGF), Vol. 34/1, pp. 2-13, 2015. Abstract: We present a fast algorithm for global 3D symmetry detection with approximation guarantees. The algorithm is guaranteed to find the best approximate symmetry of a given shape, to within a user-specified threshold, with very high probability. Our method uses a carefully designed sampling of the transformation space, where each transformation is efficiently evaluated using a sub-linear algorithm. We prove that the density of the sampling depends on the total variation of the shape, allowing us to derive formal bounds on the algorithm’s complexity and approximation quality. We further investigate different volumetric shape representations (in the form of truncated distance transforms), and in this way control the total variation of the shape and hence the sampling density and the runtime of the algorithm. A comprehensive set of experiments assesses the proposed method, including an evaluation on the eight categories of the COSEG data-set. To our knowledge, this is the first large-scale evaluation of any symmetry detection technique.


R. Litman, A. M. Bronstein, M. M. Bronstein, U. Castellani,

"Supervised learning of bag-of-features shape descriptors using sparse coding", Computer Graphics Forum (CGF), Vol. 33/5, pp. 127-136 (special issue SGP), 2014. Abstract: We present a method for supervised learning of shape descriptors for shape retrieval applications. Many content-based shape retrieval approaches follow the bag-of-features (BoF) paradigm commonly used in text and image retrieval by first computing local shape descriptors, and then representing them in a "geometric dictionary" using vector quantization. A major drawback of such approaches is that the dictionary is constructed in an unsupervised manner using clustering, unaware of the last stage of the process (pooling of the local descriptors into a BoF, and comparison of the latter using some metric). In this paper, we replace the clustering with dictionary learning, where every atom acts as a feature, followed by sparse coding and pooling to get the final BoF descriptor. Both the dictionary and the sparse codes can be learned in the supervised regime via bi-level optimization using a task-specific objective that promotes invariance desired in the specific application. We show significant performance improvement on several standard shape retrieval benchmarks.

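The unsupervised bag-of-features baseline that this paper improves on (local descriptors quantized against a geometric dictionary, then pooled into one histogram per shape) can be sketched as soft vector quantization. The Gaussian soft-assignment kernel, its width `sigma`, and the random 16-word vocabulary are illustrative assumptions; in that baseline the vocabulary would come from clustering training descriptors, whereas the paper replaces this step with supervised dictionary learning and sparse coding, which the sketch does not implement.

```python
import numpy as np

def soft_bag_of_features(descriptors, vocabulary, sigma):
    """Pool soft word assignments of per-vertex descriptors into one histogram.

    descriptors: (n_vertices, d) local shape descriptors
    vocabulary:  (n_words, d) geometric words
    """
    # Squared Euclidean distance from every descriptor to every word.
    d2 = ((descriptors[:, None, :] - vocabulary[None, :, :]) ** 2).sum(axis=2)
    # Gaussian soft assignment; subtracting each row's minimum avoids underflow
    # and cancels out in the per-vertex normalization below.
    w = np.exp(-(d2 - d2.min(axis=1, keepdims=True)) / (2.0 * sigma ** 2))
    w /= w.sum(axis=1, keepdims=True)  # each vertex distributes unit mass
    # Average pooling over vertices gives a distribution over the vocabulary.
    return w.mean(axis=0)

rng = np.random.default_rng(0)
descriptors = rng.random((500, 6))  # e.g. 6-dimensional local descriptors at 500 vertices
vocabulary = rng.random((16, 6))    # hypothetical 16-word vocabulary
bof = soft_bag_of_features(descriptors, vocabulary, sigma=0.2)
print(bof.shape, round(bof.sum(), 6))  # (16,) 1.0
```

Two shapes can then be compared through some metric on their histograms, which is the last stage the abstract says the unsupervised dictionary is unaware of.
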

P. Sprechmann, A. M. Bronstein, G. Sapiro,

"Learning efficient sparse and low-rank models", IEEE Trans. on Pattern Analysis and Machine Intelligence (PAMI), 2015. Abstract: Parsimony, including sparsity and low rank, has been shown to successfully model data in numerous machine learning and signal processing tasks. Traditionally, parsimonious modeling approaches rely on an iterative algorithm that minimizes an objective function with parsimony-promoting terms. The inherently sequential structure and data-dependent complexity and latency of iterative optimization constitute a major limitation in many applications requiring real-time performance or involving large-scale data. Another limitation encountered by these models is the difficulty of their inclusion in supervised learning scenarios, where the higher-level training objective would depend on the solution of the lower-level pursuit problem. The resulting bilevel optimization problems are in general notoriously difficult to solve. In this paper, we propose to move the emphasis from the model to the pursuit algorithm, and develop a process-centric view of parsimonious modeling, in which a deterministic fixed-complexity pursuit process is used in lieu of iterative optimization. We show a principled way to construct learnable pursuit process architectures for structured sparse and robust low-rank models from the iteration of proximal descent algorithms. These architectures approximate the exact parsimonious representation with a fraction of the complexity of the standard optimization methods. We also show that carefully chosen training regimes allow parsimonious models to be naturally extended to discriminative settings. State-of-the-art results are demonstrated on several challenging problems in image and audio processing with several orders of magnitude speedup compared to the exact optimization algorithms.

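The process-centric idea can be illustrated by truncating a proximal descent method to a fixed number of steps. The sketch below unrolls ISTA for the lasso problem min_z ½‖y − Dz‖² + λ‖z‖₁ into a fixed-depth feed-forward map, written with W = Dᵀ/L, S = I − DᵀD/L and threshold θ = λ/L, the quantities a learned encoder in the spirit of LISTA (Gregor and LeCun) would treat as trainable. Here they are only initialized from D, with no training; the paper's structured sparse and robust low-rank architectures are not covered, and all names are ours.

```python
import numpy as np

def soft_threshold(v, theta):
    """Proximal operator of theta * ||.||_1 (elementwise shrinkage)."""
    return np.sign(v) * np.maximum(np.abs(v) - theta, 0.0)

def unrolled_ista(y, D, lam, n_layers=10):
    """Fixed-complexity pursuit: n_layers ISTA steps for
    min_z 0.5 * ||y - D z||^2 + lam * ||z||_1, run as a feed-forward map."""
    L = np.linalg.norm(D, 2) ** 2           # Lipschitz constant of the data-term gradient
    W = D.T / L                              # input weights (trainable in a learned encoder)
    S = np.eye(D.shape[1]) - D.T @ D / L     # lateral weights (trainable in a learned encoder)
    theta = lam / L                          # shrinkage threshold (trainable in a learned encoder)
    z = soft_threshold(W @ y, theta)         # first layer, starting from z = 0
    for _ in range(n_layers - 1):
        z = soft_threshold(W @ y + S @ z, theta)
    return z

def lasso_objective(y, D, lam, z):
    return 0.5 * np.sum((y - D @ z) ** 2) + lam * np.sum(np.abs(z))

rng = np.random.default_rng(0)
D = rng.normal(size=(20, 50))  # hypothetical overcomplete dictionary
y = rng.normal(size=20)
z = unrolled_ista(y, D, lam=0.5, n_layers=50)
print(lasso_objective(y, D, 0.5, z), lasso_objective(y, D, 0.5, np.zeros(50)))
```

Every input costs exactly `n_layers` matrix-vector products, which is the fixed, data-independent latency the abstract contrasts with iterating to convergence.
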

D. Eynard, A. Kovnatsky, M. M. Bronstein, K. Glashoff, A. M. Bronstein,

"Multimodal manifold analysis using simultaneous diagonalization of Laplacians", IEEE Trans. Pattern Analysis and Machine Intelligence (PAMI), Vol. 37/12, pp. 2505-2517, 2015. Abstract: We construct an extension of spectral and diffusion geometry to multiple modalities through simultaneous diagonalization of Laplacian matrices. This naturally extends classical data analysis tools based on spectral geometry, such as diffusion maps and spectral clustering. We provide several synthetic and real examples of manifold learning, retrieval, and clustering demonstrating that the joint spectral geometry frequently better captures the inherent structure of multi-modal data. We also show that many previous approaches to multimodal manifold analysis can be seen as particular cases of our framework.


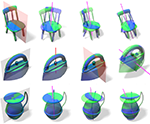

A. Kovnatsky, M. M. Bronstein, A. M. Bronstein, K. Glashoff, R. Kimmel,

"Coupled quasi-harmonic bases", Computer Graphics Forum (CGF), Vol. 32/2, pp. 439-448, 2014. Abstract: State-of-the-art approaches to shape analysis, synthesis, and correspondence rely on natural harmonic bases, namely Laplacian eigenbases, that allow using classical tools from harmonic analysis on manifolds. However, many applications involving multiple shapes are hindered by the fact that Laplacian eigenbases computed independently on different shapes are often incompatible with each other. In this paper, we propose the construction of common approximate eigenbases for multiple shapes using approximate joint diagonalization algorithms, taking as input a set of corresponding functions (e.g., indicator functions of stable regions) on the two shapes. We illustrate the benefits of the proposed approach on tasks from shape editing, pose transfer, correspondence, and similarity.


J. Pokrass, A. M. Bronstein, M. M. Bronstein, P. Sprechmann, G. Sapiro,

"Sparse modeling of intrinsic correspondences", Computer Graphics Forum (CGF), Vol. 32/2, pp. 459-468, 2014. Abstract: We present a novel sparse modeling approach to non-rigid shape matching using only the ability to detect repeatable regions. As the input to our algorithm, we are given only two sets of regions in two shapes; no descriptors are provided, so the correspondence between the regions is not known, nor do we know how many regions correspond in the two shapes. We show that even with such scarce information, it is possible to establish very accurate correspondence between the shapes by using methods from the field of sparse modeling, this being the first non-trivial use of sparse models in shape correspondence. We formulate the problem of permuted sparse coding, in which we solve simultaneously for an unknown permutation ordering the regions on two shapes and for an unknown correspondence in functional representation. We also propose a robust variant capable of handling incomplete matches. Numerically, the problem is solved efficiently by alternating the solution of a linear assignment and a sparse coding problem. The proposed methods are evaluated qualitatively and quantitatively on standard benchmarks containing both synthetic and scanned objects.


J. Masci, M. M. Bronstein, A. M. Bronstein, J. Schmidhuber,

"Multimodal similarity-preserving hashing", IEEE Trans. Pattern Analysis and Machine Intelligence (PAMI), Vol. 36/4, pp. 824-830, 2014. Abstract: We introduce an efficient computational framework for hashing data belonging to multiple modalities into a single representation space where they become mutually comparable. The proposed approach is based on a novel coupled siamese neural network architecture and allows unified treatment of intra- and inter-modality similarity learning. Unlike existing cross-modality similarity learning approaches, our hashing functions are not limited to binarized linear projections and can assume arbitrarily complex forms. We show experimentally that our method significantly outperforms state-of-the-art hashing approaches on multimedia retrieval tasks.


D. Raviv, A. M. Bronstein, M. M. Bronstein, R. Kimmel, N. Sochen,

"Equi-affine invariant intrinsic geometries for bendable shapes analysis", Journal of Mathematical Imaging and Vision (JMIV), Vol. 50/1-2, pp. 144-163, 2014. Abstract: Traditional models of bendable surfaces are based on the exact or approximate invariance to deformations that do not tear or stretch the shape, leaving intact an intrinsic geometry associated with it. Intrinsic geometries are typically defined using either the shortest path length (geodesic distance) or properties of heat diffusion (diffusion distance) on the surface. Both ways are implicitly derived from the metric induced by the ambient Euclidean space. In this paper, we depart from this restrictive assumption by observing that a different choice of the metric results in a richer set of geometric invariants. We extend the classic equi-affine arclength, defined on convex surfaces, to arbitrary shapes with non-vanishing Gaussian curvature. As a result, a family of affine-invariant intrinsic geometries is obtained. The potential of this novel framework is explored in a wide range of applications such as shape matching and retrieval, symmetry detection, and computation of Voronoi tessellation. We show that in some shape analysis tasks, our affine-invariant intrinsic geometries often outperform their Euclidean-based counterparts.


A. Kovnatsky, D. Raviv, A. M. Bronstein, M. M. Bronstein, R. Kimmel,

"Geometric and photometric data fusion in non-rigid shape analysis", Numerical Mathematics: Theory, Methods and Applications (NM-TMA), Vol. 6/1, pp. 199-222, 2013. Abstract: In this paper, we explore the use of the diffusion geometry framework for the fusion of geometric and photometric information in local and global shape descriptors. Our construction is based on the definition of a diffusion process on the shape manifold embedded into a high-dimensional space where the embedding coordinates represent the photometric information. Experimental results show that such data fusion is useful in coping with different challenges of shape analysis where pure geometric and pure photometric methods fail.


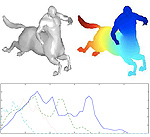

J. Pokrass, A. M. Bronstein, M. M. Bronstein,

"Partial shape matching without point-wise correspondence", Numerical Mathematics: Theory, Methods and Applications (NM-TMA), Vol. 6/1, pp. 223, 2013. Abstract: Partial similarity of shapes is a challenging problem arising in many important applications in computer vision, shape analysis, and graphics, e.g., when one has to deal with partial information and acquisition artifacts. The problem is especially hard when the underlying shapes are non-rigid and are given up to a deformation. Partial matching is usually approached by computing local descriptors on a pair of shapes and then establishing a point-wise non-bijective correspondence between the two, taking into account possibly different parts. In this paper, we introduce an alternative correspondence-less approach to matching fragments to an entire shape undergoing a non-rigid deformation. We use diffusion geometric descriptors and optimize over the integration domains on which the integral descriptors of the two parts match. The problem is regularized using the Mumford-Shah functional. We show an efficient discretization based on the Ambrosio-Tortorelli approximation generalized to triangular meshes and point clouds, and present experiments demonstrating the success of the proposed method.


R. Litman, A. M. Bronstein,

"Learning spectral descriptors for deformable shape correspondence", IEEE Trans. on Pattern Analysis and Machine Intelligence (PAMI), Vol. 36/1, pp. 171-180, 2013. Abstract: Informative and discriminative feature descriptors play a fundamental role in deformable shape analysis. For example, they have been successfully employed in correspondence, registration, and retrieval tasks. In recent years, significant attention has been devoted to descriptors obtained from the spectral decomposition of the Laplace-Beltrami operator associated with the shape. Notable examples in this family are the heat kernel signature (HKS) and the recently introduced wave kernel signature (WKS). Laplacian-based descriptors achieve state-of-the-art performance in numerous shape analysis tasks; they are computationally efficient, isometry-invariant by construction, and can gracefully cope with a variety of transformations. In this paper, we formulate a generic family of parametric spectral descriptors. We argue that in order to be optimized for a specific task, the descriptor should take into account the statistics of the corpus of shapes to which it is applied (the "signal") and those of the class of transformations to which it is made insensitive (the "noise"). While such statistics are hard to model axiomatically, they can be learned from examples. Following the spirit of the Wiener filter in signal processing, we show a learning scheme for the construction of optimized spectral descriptors and relate it to Mahalanobis metric learning. The superiority of the proposed approach in generating correspondences is demonstrated on synthetic and scanned human figures. We also show that the learned descriptors are robust enough to be learned on synthetic data and transferred successfully to scanned shapes. Code: https://guest@vista.eng.tau.ac.il:8443/svn/main/pub/OptimalDiffusionKernels (anonymous svn; username: guest, password: empty)
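
To make "parametric spectral descriptors" concrete: given Laplacian eigenpairs (λ_k, φ_k), one natural way to write such a family, which we believe matches the setting described above, is d_i(x) = Σ_k τ_i(λ_k) φ_k(x)², where each τ_i is a spectral filter; the heat kernel signature is the special case τ_t(λ) = exp(−tλ). The sketch assumes a combinatorial graph Laplacian as a stand-in for a discretized Laplace-Beltrami operator and uses fixed heat-kernel filters rather than learned ones; all names are hypothetical.

```python
import numpy as np

def graph_laplacian(adjacency: np.ndarray) -> np.ndarray:
    """Combinatorial Laplacian L = Deg - A (a stand-in for a mesh Laplace-Beltrami operator)."""
    return np.diag(adjacency.sum(axis=1)) - adjacency

def spectral_descriptor(eigvals, eigvecs, filters):
    """d[x, i] = sum_k filters[i](lambda_k) * phi_k(x)**2 for every vertex x."""
    responses = np.stack([f(eigvals) for f in filters], axis=1)  # (K, n_filters)
    return (eigvecs ** 2) @ responses                             # (n_vertices, n_filters)

# Toy "shape": a cycle graph with 8 vertices.
n = 8
A = np.zeros((n, n))
for i in range(n):
    A[i, (i + 1) % n] = A[(i + 1) % n, i] = 1.0
eigvals, eigvecs = np.linalg.eigh(graph_laplacian(A))  # columns of eigvecs are phi_k

# Heat kernel signature as a special case: filters exp(-t * lambda) at three times.
times = [0.0, 0.5, 2.0]
hks_filters = [lambda lam, t=t: np.exp(-t * lam) for t in times]
hks = spectral_descriptor(eigvals, eigvecs, hks_filters)
print(hks.shape)  # (8, 3)
```

On the cycle every vertex looks the same, so each column is constant, and at t = 0 every entry equals 1 because the eigenvectors form an orthonormal basis. In a learned descriptor, the fixed exponentials would be replaced by filters whose parameters are fitted to training examples.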


R. Litman, A. M. Bronstein, M. M. Bronstein,

"Stable volumetric features in deformable shapes", Computers and Graphics (CAG), Vol. 36/5, 2012. Abstract: Region feature detectors and descriptors have become a successful and popular alternative to point descriptors in image analysis due to their high robustness and repeatability, leading to significant interest in the shape analysis community in finding analogous approaches in the 3D world. Recent works have successfully extended the maximally stable extremal region (MSER) detection algorithm to surfaces. In many applications, however, a volumetric shape model is more appropriate, and modeling shape deformations as approximate isometries of the volume of an object, rather than its boundary, better captures the natural behavior of non-rigid deformations. In this paper, we formulate a diffusion-geometric framework for volumetric stable component detection and description in deformable shapes. An evaluation of our method on the SHREC’11 feature detection benchmark and SCAPE human body scans shows its potential as a source of high-quality features. Examples demonstrating the drawbacks of surface stable components and the advantage of their volumetric counterparts are also presented.


C. Strecha, A. M. Bronstein, M. M. Bronstein, P. Fua,

"LDAHash: improved matching with smaller descriptors",

IEEE Trans. Pattern Analysis and Machine Intelligence (PAMI), Vol. 34/1, 2012. Abstract: SIFT-like local feature descriptors are ubiquitously employed in such computer vision applications as content-based retrieval, video analysis, copy detection, object recognition, photo-tourism, and 3D reconstruction from multiple views. Feature descriptors can be designed to be invariant to certain classes of photometric and geometric transformations, in particular, affine and intensity scale transformations. However, real transformations that an image can undergo can only be approximately modeled in this way, and thus most descriptors are only approximately invariant in practice. Second, descriptors are usually high-dimensional (e.g., SIFT is represented as a 128-dimensional vector). In large-scale retrieval and matching problems, this can pose challenges in storing and retrieving descriptor data. We propose mapping the descriptor vectors into the Hamming space, in which the Hamming metric is used to compare the resulting representations. This way, we reduce the size of the descriptors by representing them as short binary strings and learn descriptor invariance from examples. We show extensive experimental validation, demonstrating the advantage of the proposed approach. Resources: Code | Data
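
The mapping into Hamming space can be sketched as an affine projection followed by thresholding, y = sign(Px + t), with codes compared by counting differing bits. To our understanding this is the form LDAHash uses, with P and t learned from matching and non-matching pairs; in the sketch they are random placeholders, so it illustrates only encoding and comparison, not the learning. The sizes (128-dimensional input, 64-bit codes) and helper names are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
dim, n_bits = 128, 64  # SIFT-sized input, 64-bit binary code

# Placeholders for the learned projection P and threshold t (hypothetical values).
P = rng.normal(size=(n_bits, dim))
t = np.zeros(n_bits)

def encode(x):
    """Map a descriptor to n_bits bits: bit i is 1 iff (P x + t)_i > 0."""
    bits = (P @ x + t) > 0
    return np.packbits(bits)  # pack into bytes: 64 bits -> 8 bytes

def hamming(a, b):
    """Number of differing bits between two packed codes."""
    return int(np.unpackbits(np.bitwise_xor(a, b)).sum())

x = rng.random(dim)                       # a descriptor
x_near = x + 0.01 * rng.normal(size=dim)  # a slightly perturbed copy
x_far = rng.random(dim)                   # an unrelated descriptor

print(hamming(encode(x), encode(x)))      # 0
print(hamming(encode(x), encode(x_near)), hamming(encode(x), encode(x_far)))
```

A 64-bit code occupies 8 bytes, compared with 128 floating-point values for the original descriptor, and the XOR-and-count comparison is what makes large-scale matching cheap.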


D. Raviv, A. M. Bronstein, M. M. Bronstein, R. Kimmel, N. Sochen,

"Affine-invariant geodesic geometry of deformable 3D shapes", Computers and Graphics (CAG), Vol. 35/3, 2011. Abstract: Natural objects can be subject to various transformations yet still preserve properties that we refer to as invariants. Here, we use definitions of affine-invariant arclength for surfaces in ℝ³ in order to extend the set of existing non-rigid shape analysis tools. We show that by re-defining the surface metric as its equi-affine version, the surface with its modified metric tensor can be treated as a canonical Euclidean object on which most classical Euclidean processing and analysis tools can be applied. The new definition of a metric is used to extend the fast marching method for computing geodesic distances on surfaces, where the distances are now defined with respect to an affine-invariant arclength. Applications of the proposed framework demonstrate its invariance, efficiency, and accuracy in shape analysis.


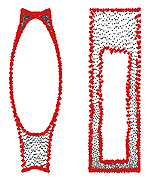

R. Litman, A. M. Bronstein, M. M. Bronstein,

"Diffusion-geometric maximally stable component detection in deformable shapes", Computers and Graphics (CAG), Vol. 35/3, 2011. Abstract: Maximally stable component detection is a very popular method for feature analysis in images, mainly due to its low computational cost and high repeatability. With the recent advance of feature-based methods in geometric shape analysis, there is significant interest in finding analogous approaches in the 3D world. In this paper, we formulate a diffusion-geometric framework for stable component detection in non-rigid 3D shapes, which can be used for geometric feature detection and description. A quantitative evaluation of our method on the SHREC’10 feature detection benchmark shows its potential as a source of high-quality features. Code: https://guest@vista.eng.tau.ac.il:8443/svn/main/pub/ShapeMSER/ (anonymous svn; username: guest, password: empty)

R. Kimmel, C. Zhang, A. M. Bronstein, M. M. Bronstein,

"Are MSER features really interesting?", IEEE Trans. on Pattern Analysis and Machine Intelligence (PAMI), Vol. 33/11, pp. 2316-2320, 2011. Abstract: Detection and description of affine-invariant features is a cornerstone of numerous computer vision applications. In this note, we analyze the notion of maximally stable extremal regions (MSER) through the prism of the curvature scale space, and conclude that in its original definition, MSER prefers regular (round) regions. Arguing that interesting features in natural images usually have irregular shapes, we propose alternative definitions of MSER which are free of this bias, yet maintain their invariance properties.

A. M. Bronstein, M. M. Bronstein, M. Ovsjanikov, L. J. Guibas,

"Shape Google: geometric words and expressions for invariant shape retrieval", ACM Trans. Graphics (TOG), Vol. 30/1, pp. 1-20, January 2011. Abstract: The computer vision and pattern recognition communities have recently witnessed a surge of feature-based methods in object recognition and image retrieval applications. These methods allow representing images as collections of "visual words" and treat them using text search approaches following the "bag of features" paradigm. In this paper, we explore analogous approaches in the 3D world applied to the problem of non-rigid shape retrieval in large databases. Using multiscale diffusion heat kernels as "geometric words", we construct compact and informative shape descriptors by means of the "bag of features" approach. We also show that considering pairs of geometric words ("geometric expressions") allows creating spatially-sensitive bags of features with better discriminative power. Finally, adopting metric learning approaches, we show that shapes can be efficiently represented as binary codes. Our approach achieves state-of-the-art results on the SHREC 2010 large-scale shape retrieval benchmark.
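The "bag of features" step described above turns a variable number of local descriptors into a fixed-length histogram over a vocabulary of "geometric words". The following is a minimal sketch of that generic idea, not the paper's pipeline. Several choices are assumptions made for illustration: the Gaussian soft assignment, the bandwidth `sigma`, the L1 histogram comparison, and the function names `bag_of_features` and `l1_distance`. Descriptors and vocabulary are plain coordinate tuples. Heat-kernel descriptors and vocabulary learning are not reproduced.

```python
import math


def bag_of_features(descriptors, vocabulary, sigma=1.0):
    """Soft vector quantization of descriptors against a vocabulary.

    Each descriptor votes for every word with weight exp(-||d - w||^2 / (2 sigma^2)),
    normalised so its votes sum to 1; the result is averaged over descriptors.
    `descriptors` must be non-empty.
    """
    hist = [0.0] * len(vocabulary)
    for d in descriptors:
        sq = [math.dist(d, w) ** 2 for w in vocabulary]
        m = min(sq)
        # Subtracting the smallest squared distance keeps the largest vote at 1
        # (avoids underflow); it cancels out after normalisation.
        votes = [math.exp(-(s - m) / (2.0 * sigma ** 2)) for s in sq]
        total = sum(votes)
        for k, v in enumerate(votes):
            hist[k] += v / total
    return [h / len(descriptors) for h in hist]


def l1_distance(h1, h2):
    """L1 distance between two histograms of equal length."""
    return sum(abs(a - b) for a, b in zip(h1, h2))
```

Two shapes are then compared via their histograms. Because the histogram has one bin per vocabulary word, shapes with different numbers of sampled points remain comparable.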

M. M. Bronstein, A. M. Bronstein,

"Shape recognition with spectral distances", IEEE Trans. on Pattern Analysis and Machine Intelligence (PAMI), Vol. 33/5, 2011. Abstract: Recent works have shown the use of diffusion geometry for various pattern recognition applications, including non-rigid shape analysis. In this paper, we introduce spectral shape distance as a general framework for distribution-based shape similarity and show that two recent methods for shape similarity due to Rustamov and Mahmoudi & Sapiro are particular cases thereof.

G. Rosman, M. M. Bronstein, A. M. Bronstein, R. Kimmel,

"Nonlinear dimensionality reduction by topologically constrained isometric embedding", Intl. Journal of Computer Vision (IJCV), Vol. 89/1, pp. 56-68, August 2010. Abstract: Many manifold learning procedures try to embed given feature data into a flat space of low dimensionality while preserving as much as possible the metric in the natural feature space. The embedding process usually relies on distances between neighboring features, mainly because distances between features that are far apart from each other often provide an unreliable estimate of the true distance on the feature manifold due to its non-convexity. Distortions resulting from using long geodesics indiscriminately lead to a known limitation of the Isomap algorithm when used to map nonconvex manifolds. Presented is a framework for nonlinear dimensionality reduction that uses both local and global distances in order to learn the intrinsic geometry of flat manifolds with boundaries. The resulting algorithm filters out potentially problematic distances between distant feature points based on the properties of the geodesics connecting those points and their relative distance to the boundary of the feature manifold, thus avoiding an inherent limitation of the Isomap algorithm. Since the proposed algorithm matches non-local structures, it is robust to strong noise. We show experimental results demonstrating the advantages of the proposed approach over conventional dimensionality reduction techniques, both global and local in nature.

D. Raviv, A. M. Bronstein, M. M. Bronstein, R. Kimmel,

"Full and partial symmetries of non-rigid shapes", Intl. Journal of Computer Vision (IJCV), Vol. 89/1, pp. 18-39, August 2010. Abstract: Symmetry and self-similarity are the cornerstone of Nature, exhibiting themselves through the shapes of natural creations and ubiquitous laws of physics. Since many natural objects are symmetric, the absence of symmetry can often be an indication of some anomaly or abnormal behavior. Therefore, detection of asymmetries is important in numerous practical applications, including crystallography, medical imaging, and face recognition, to mention a few. Conversely, the assumption of underlying shape symmetry can facilitate solutions to many problems in shape reconstruction and analysis. Traditionally, symmetries are described as extrinsic geometric properties of the shape. While adequate for rigid shapes, such a description is inappropriate for non-rigid ones: extrinsic symmetry can be broken as a result of shape deformations, while intrinsic symmetry is preserved. In this paper, we present a generalization of symmetries for non-rigid shapes and a numerical framework for their analysis, addressing the problems of full and partial exact and approximate symmetry detection and classification.

A. M. Bronstein, M. M. Bronstein, R. Kimmel, M. Mahmoudi, G. Sapiro,

"A Gromov-Hausdorff framework with diffusion geometry for topologically-robust non-rigid shape matching", Intl. Journal of Computer Vision (IJCV), Vol. 89/2-3, pp. 266-286, September 2010. Abstract: In this paper, the problem of non-rigid shape recognition is viewed from the perspective of metric geometry, and the applicability of diffusion distances within the Gromov-Hausdorff framework is explored. While the commonly used geodesic distance exploits the shortest path between points on the surface, the diffusion distance averages over all paths connecting the points. The diffusion distance provides an intrinsic distance measure which is robust, in particular to topological changes. Such changes may be a result of natural non-rigid deformations, as well as acquisition noise, in the form of holes or missing data, and representation noise due to inaccurate mesh construction. The presentation of the proposed framework is complemented with numerous examples demonstrating that, in addition to the relatively low complexity involved in computing diffusion distances between surface points, their recognition and matching performance compares favorably to that of classical geodesic distances in the presence of topological changes between the non-rigid shapes.
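The contrast drawn above can be illustrated on a small graph: a geodesic distance follows one shortest path, while a diffusion distance averages over all paths. The toy sketch below is not the paper's implementation. It assumes an unweighted graph given as adjacency lists and a plain random walk. It measures the L2 distance between rows x and y of the t-step transition matrix P^t, and it omits the stationary-distribution weighting used in the usual diffusion-map definition. The names `transition_matrix`, `diffusion_distance` and `geodesic_distance` are hypothetical.

```python
import math
from collections import deque


def transition_matrix(adj):
    """Row-stochastic random-walk matrix P for an unweighted graph (adjacency lists)."""
    n = len(adj)
    P = [[0.0] * n for _ in range(n)]
    for i, nbrs in enumerate(adj):
        for j in nbrs:
            P[i][j] = 1.0 / len(nbrs)
    return P


def matmul(A, B):
    """Dense product of an n x k matrix A and a k x m matrix B."""
    n, k, m = len(A), len(B), len(B[0])
    return [[sum(A[i][l] * B[l][j] for l in range(k)) for j in range(m)] for i in range(n)]


def diffusion_distance(adj, x, y, t):
    """Unweighted L2 distance between rows x and y of P^t (t >= 1).

    Entry (x, z) of P^t sums the probabilities of *all* t-step walks from x to z,
    so a single extra shortcut edge changes it only partially.
    """
    P = transition_matrix(adj)
    Pt = P
    for _ in range(t - 1):
        Pt = matmul(Pt, P)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(Pt[x], Pt[y])))


def geodesic_distance(adj, x, y):
    """Hop count of the shortest path (breadth-first search), for comparison."""
    dist = {x: 0}
    queue = deque([x])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist.get(y, math.inf)
```

On an 8-node ring, adding one shortcut edge between nodes 0 and 4 collapses their geodesic distance from 4 to 1. The diffusion quantity, by contrast, aggregates many walks, which is the kind of robustness to topological changes the abstract refers to.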

A. M. Bronstein, M. M. Bronstein, Y. Carmon, R. Kimmel,

"Partial similarity of shapes using a statistical significance measure", IPSJ Trans. Computer Vision and Applications, Vol. 1, pp. 105-114, 2009. Abstract: Partial matching of geometric structures is important in computer vision, pattern recognition and shape analysis applications. The problem consists of matching similar parts of shapes that may be dissimilar as a whole. Recently, it was proposed to consider partial similarity as a multi-criterion optimization problem that tries to simultaneously maximize the similarity and the significance of the matching parts. A major challenge in that framework is providing a quantitative measure of the significance of a part of an object. Here, we define the significance of a part of a shape by its discriminative power with respect to a given shape database, that is, the uniqueness of the part. We define a point-wise significance density using a statistical weighting approach similar to the term frequency-inverse document frequency (tf-idf) weighting employed in search engines. The significance measure of a given part is obtained by integrating over this density. Numerical experiments show that the proposed approach produces intuitively significant parts, and demonstrate an improvement in the performance of partial matching between shapes.

A. M. Bronstein, M. M. Bronstein, R. Kimmel,

"Topology-invariant similarity of nonrigid shapes", Intl. Journal of Computer Vision (IJCV), Vol. 81/3, pp. 281-301, March 2009. Abstract: This paper explores the problem of similarity criteria between nonrigid shapes. Broadly speaking, such criteria are divided into intrinsic and extrinsic, the former referring to the metric structure of the object and the latter to how it is laid out in the Euclidean space. Both criteria have their advantages and disadvantages: extrinsic similarity is sensitive to nonrigid deformations, while intrinsic similarity is sensitive to topological noise. In this paper, we approach the problem from the perspective of metric geometry. We show that by unifying the extrinsic and intrinsic similarity criteria, it is possible to obtain a stronger topology-invariant similarity, suitable for comparing deformed shapes with different topology. We construct this new joint criterion as a tradeoff between the extrinsic and intrinsic similarity and use it as a set-valued distance. Numerical results demonstrate the efficiency of our approach in cases where using either extrinsic or intrinsic criteria alone would fail.

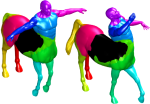

A. M. Bronstein, M. M. Bronstein, A. M. Bruckstein, R. Kimmel,

"Partial similarity of objects, or how to compare a centaur to a horse", Intl. Journal of Computer Vision (IJCV), Vol. 84/2, pp. 163-183, 2009. Abstract: Similarity is one of the most important abstract concepts in human perception of the world. In computer vision, numerous applications deal with comparing objects observed in a scene with some a priori known patterns. It often happens that two objects which are not similar as a whole have large similar parts, that is, they are partially similar. Here, we present a novel approach to quantify partial similarity using the notion of Pareto optimality. We exemplify our approach on the problems of recognizing non-rigid geometric objects and images, and of analyzing text sequences. Resources: 3D non-rigid shapes dataset
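Quantifying partial similarity through Pareto optimality means keeping every trade-off that cannot be improved in one criterion without worsening the other. The sketch below makes several assumptions. Each candidate is a pair (partiality, dissimilarity): partiality is how much of the object is left out of the matched parts, and dissimilarity is how different the matched parts are. Both are minimized. How candidates are generated is outside the scope of this sketch, and `pareto_frontier` is a hypothetical name. The code only shows how the Pareto-optimal set is extracted from given candidates.

```python
from typing import List, Tuple


def pareto_frontier(candidates: List[Tuple[float, float]]) -> List[Tuple[float, float]]:
    """Return the non-dominated (partiality, dissimilarity) pairs, both minimized.

    A pair dominates another if it is no worse in both criteria and strictly
    better in at least one. Exact duplicates are reported once.
    """
    frontier = []
    best_dissim = float("inf")
    # After sorting by partiality (ties broken by dissimilarity), a pair is
    # non-dominated iff its dissimilarity is strictly below all earlier pairs.
    for partiality, dissim in sorted(candidates):
        if dissim < best_dissim:
            frontier.append((partiality, dissim))
            best_dissim = dissim
    return frontier
```

The sort makes the extraction O(m log m) for m candidates. The resulting frontier is the set-valued notion of partial similarity, which scalar distances collapse to a single number.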

O. Weber, Y. Devir, A. M. Bronstein, M. M. Bronstein, R. Kimmel,

"Parallel algorithms for approximation of distance maps on parametric surfaces", ACM Transactions on Graphics, Vol. 27/4, October 2008. Abstract: We present an efficient O(n) numerical algorithm for first-order approximation of geodesic distances on geometry images, where n is the number of points on the surface. The structure of our algorithm allows efficient implementation on parallel architectures. Two implementations, on a SIMD processor and on a GPU, are discussed. Numerical results demonstrate up to four orders of magnitude improvement in execution time compared to state-of-the-art algorithms. Resources: SSE2 code | GPU code (by Ofir Weber) | SIGGRAPH'08 trailer
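The O(n) cost and the parallelism mentioned above come from updating grid points in fixed raster-scan orders instead of through a priority queue. As a hedged illustration of that general idea only, the sketch below implements the classical fast sweeping method, a related technique swapped in here. It solves the eikonal equation |∇u| = 1 on a flat grid with unit spacing, using four alternating raster directions and a first-order upwind update. It does not model the metric of a parametric surface or geometry image, and it runs sequentially. `fast_sweep_distance` and its parameters are hypothetical names.

```python
import math


def fast_sweep_distance(rows, cols, sources, n_iter=2):
    """First-order approximation of Euclidean distance to `sources` on a
    unit-spaced grid, using Gauss-Seidel raster sweeps in four directions."""
    INF = math.inf
    u = [[INF] * cols for _ in range(rows)]
    for r, c in sources:
        u[r][c] = 0.0

    def update(r, c):
        if u[r][c] == 0.0:
            return  # source points stay fixed
        a = min(u[r][c - 1] if c > 0 else INF, u[r][c + 1] if c < cols - 1 else INF)
        b = min(u[r - 1][c] if r > 0 else INF, u[r + 1][c] if r < rows - 1 else INF)
        if math.isinf(a) and math.isinf(b):
            return  # no information has reached this point yet
        if abs(a - b) >= 1.0:
            cand = min(a, b) + 1.0  # one-sided update
        else:
            cand = (a + b + math.sqrt(2.0 - (a - b) ** 2)) / 2.0  # two-sided update
        u[r][c] = min(u[r][c], cand)

    # The four raster orders: each sweep is a single O(n) pass over the grid.
    orders = [
        (range(rows), range(cols)),
        (range(rows), range(cols - 1, -1, -1)),
        (range(rows - 1, -1, -1), range(cols)),
        (range(rows - 1, -1, -1), range(cols - 1, -1, -1)),
    ]
    for _ in range(n_iter):
        for row_order, col_order in orders:
            for r in row_order:
                for c in col_order:
                    update(r, c)
    return u
```

Along grid axes the first-order update is exact. Off the axes it overestimates the Euclidean distance, which is the usual accuracy cost of a first-order scheme.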

A. M. Bronstein, M. M. Bronstein, A. M. Bruckstein, R. Kimmel,

"Analysis of two-dimensional non-rigid shapes", Intl. Journal of Computer Vision (IJCV), Vol. 78/1, pp. 67-88, June 2008. Abstract: Analysis of deformable two-dimensional shapes is an important problem, encountered in numerous pattern recognition, computer vision and computer graphics applications. In this paper, we address three major problems in the analysis of non-rigid shapes: similarity, partial similarity, and correspondence. We present an axiomatic construction of similarity criteria for deformation-invariant shape comparison, based on intrinsic geometric properties of the shapes, and show that such criteria are related to the Gromov-Hausdorff distance. Next, we extend the problem of similarity computation to shapes which have similar parts but are dissimilar when considered as a whole, and present a construction of set-valued distances, based on the notion of Pareto optimality. Finally, we show that the correspondence between non-rigid shapes can be obtained as a byproduct of the non-rigid similarity problem. As a numerical framework, we use the generalized multidimensional scaling (GMDS) method, which is the numerical core of the three problems addressed in this paper. Resources: 2D mythological creatures dataset

A. M. Bronstein, M. M. Bronstein, R. Kimmel,

"Weighted distance maps computation on parametric three-dimensional manifolds", Journal of Computational Physics, Vol. 225/1, pp. 771-784, July 2007. Abstract: We propose an efficient computational solver for the eikonal equation on parametric three-dimensional manifolds. Our approach is based on the fast marching method for solving the eikonal equation in O(n log n) steps by numerically simulating wavefront propagation. The obtuse angle splitting problem is reformulated as a set of small integer linear programs that can be solved in O(n). Numerical simulations demonstrate the accuracy of the proposed algorithm.
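The O(n log n) bound quoted above comes from the ordering at the heart of fast marching: grid points are accepted in increasing order of distance, which a binary heap provides. The sketch below is minimal and works on a flat 2D grid with unit spacing and a uniform unit weight. Those are simplifying assumptions: parametric three-dimensional manifolds, non-uniform weights and obtuse-angle splitting are not modeled. `fast_marching` is a hypothetical name.

```python
import heapq
import math


def fast_marching(rows, cols, sources):
    """First-order fast marching for |grad u| = 1 on a unit-spaced 2D grid.

    Points are accepted in increasing order of u via a binary heap, giving
    O(n log n) total work for n grid points.
    """
    INF = math.inf
    u = [[INF] * cols for _ in range(rows)]
    accepted = [[False] * cols for _ in range(rows)]
    heap = []
    for r, c in sources:
        u[r][c] = 0.0
        heapq.heappush(heap, (0.0, r, c))

    def accepted_value(r, c):
        # Only accepted neighbours are used (upwind scheme)
        if 0 <= r < rows and 0 <= c < cols and accepted[r][c]:
            return u[r][c]
        return INF

    def local_update(r, c):
        a = min(accepted_value(r, c - 1), accepted_value(r, c + 1))
        b = min(accepted_value(r - 1, c), accepted_value(r + 1, c))
        if abs(a - b) >= 1.0:  # also covers one side being INF
            return min(a, b) + 1.0
        return (a + b + math.sqrt(2.0 - (a - b) ** 2)) / 2.0

    while heap:
        _, r, c = heapq.heappop(heap)
        if accepted[r][c]:
            continue  # stale heap entry left behind by an earlier decrease
        accepted[r][c] = True
        for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if 0 <= rr < rows and 0 <= cc < cols and not accepted[rr][cc]:
                cand = local_update(rr, cc)
                if cand < u[rr][cc]:
                    u[rr][cc] = cand
                    heapq.heappush(heap, (cand, rr, cc))
    return u
```

Instead of a decrease-key operation, the heap receives duplicate entries and skips stale ones on pop. That keeps the code short at the cost of a slightly larger heap.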

A. M. Bronstein, M. M. Bronstein, R. Kimmel,

"Calculus of non-rigid surfaces for geometry and texture manipulation", IEEE Trans. Visualization and Computer Graphics, Vol. 13/5, pp. 902-913, September-October 2007. Abstract: We present a geometric framework for automatically finding intrinsic correspondence between three-dimensional nonrigid objects. We model object deformations as near-isometries and find the correspondence as the minimum-distortion mapping. A generalization of multidimensional scaling is used as the numerical core of our approach. As a result, we can manipulate the extrinsic geometry and the texture of the objects as vectors in a linear space. We demonstrate our method on the problems of expression-invariant texture mapping onto an animated three-dimensional face, expression exaggeration, morphing between faces, and virtual body painting.

A. M. Bronstein, M. M. Bronstein, R. Kimmel,

"Expression-invariant representation of faces", IEEE Trans. Image Processing, Vol. 16/1, pp. 188-197, January 2007. Abstract: Addressed here is the problem of constructing and analyzing expression-invariant representations of human faces. We demonstrate and justify experimentally a simple geometric model that allows describing facial expressions as isometric deformations of the facial surface. The main step in the construction of an expression-invariant representation of a face involves embedding the intrinsic geometric structure of the face into some convenient low-dimensional space. We study the influence of the embedding space geometry and dimensionality on the representation accuracy and argue that, compared to its Euclidean counterpart, spherical embedding leads to notably smaller metric distortions. We experimentally support our claim by showing that a smaller embedding error leads to better recognition.

A. M. Bronstein, M. M. Bronstein, R. Kimmel,

"Efficient computation of isometry-invariant distances between surfaces", SIAM J. Scientific Computing, Vol. 28/5, pp. 1812-1836, 2006. Abstract: We present an efficient computational framework for isometry-invariant comparison of smooth surfaces. We formulate the Gromov-Hausdorff distance as a multidimensional scaling (MDS)-like continuous optimization problem. In order to construct an efficient optimization scheme, we develop a numerical tool for interpolating geodesic distances on a sampled surface from precomputed geodesic distances between the samples. For isometry-invariant comparison of surfaces in the case of partially missing data, we present the partial embedding distance, which is computed using a similar scheme. The main idea is finding a minimum-distortion mapping from one surface to another, while considering only relevant geodesic distances. We discuss numerical implementation issues and present experimental results that demonstrate its accuracy and efficiency.

M. M. Bronstein, A. M. Bronstein, R. Kimmel, I. Yavneh,

"Multigrid multidimensional scaling", Numerical Linear Algebra with Applications (NLAA), Special issue on multigrid methods, Vol. 13/2-3, pp. 149-171, March-April 2006. Abstract: Multidimensional scaling (MDS) is a generic name for a family of algorithms that construct a configuration of points in a target metric space from information about inter-point distances measured in some other metric space. Large-scale MDS problems often occur in data analysis, representation and visualization. Solving such problems efficiently is of key importance in many applications. In this paper we present a multigrid framework for MDS problems. We demonstrate the performance of our algorithm on dimensionality reduction and isometric embedding problems, two classical problems requiring efficient large-scale MDS. Simulation results show that the proposed approach significantly outperforms conventional MDS algorithms. Resources: Multigrid MDS code (MATLAB) | Tutorial (MATLAB)
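For readers unfamiliar with the underlying problem, the sketch below shows a plain single-grid MDS solver. It uses SMACOF stress majorization with unit weights, which minimizes the raw stress: the sum over i < j of (d_ij(X) - delta_ij)^2. Here d_ij(X) are distances in the embedding and delta_ij are the given distances. Multigrid frameworks of the kind described above accelerate iterations like this one; the multigrid hierarchy itself is not shown. The function names, the random initialization, and the iteration count are assumptions.

```python
import math
import random


def pairwise(X):
    """Matrix of Euclidean distances between the rows of X."""
    n = len(X)
    return [[math.dist(X[i], X[j]) for j in range(n)] for i in range(n)]


def stress(X, delta):
    """Raw stress: sum over i < j of (d_ij(X) - delta_ij)^2."""
    D = pairwise(X)
    n = len(X)
    return sum((D[i][j] - delta[i][j]) ** 2 for i in range(n) for j in range(i + 1, n))


def smacof(delta, dim=2, n_iter=200, seed=0):
    """Unweighted SMACOF: repeatedly apply the Guttman transform X <- B(X) X / n,
    which never increases the raw stress."""
    n = len(delta)
    rng = random.Random(seed)
    X = [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(n)]
    for _ in range(n_iter):
        D = pairwise(X)
        B = [[0.0] * n for _ in range(n)]
        for i in range(n):
            for j in range(n):
                if i != j and D[i][j] > 1e-12:
                    B[i][j] = -delta[i][j] / D[i][j]
            B[i][i] = -sum(B[i][j] for j in range(n) if j != i)
        X = [[sum(B[i][k] * X[k][d] for k in range(n)) / n for d in range(dim)]
             for i in range(n)]
    return X
```

Each iteration costs O(n^2) operations and convergence is typically slow for large n. That slow convergence is the motivation for accelerating the basic iteration.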

A. M. Bronstein, M. M. Bronstein, R. Kimmel,

"Generalized multidimensional scaling: a framework for isometry-invariant partial surface matching", Proc. National Academy of Sciences (PNAS), Vol. 103/5, pp. 1168-1172, January 2006. Abstract: An efficient algorithm for isometry-invariant matching of surfaces is presented. The key idea is computing the minimum-distortion mapping between two surfaces. For this purpose, we introduce the generalized multidimensional scaling, a computationally efficient continuous optimization algorithm for finding the least distortion embedding of one surface into another. The generalized multidimensional scaling algorithm allows for both full and partial surface matching. As an example, it is applied to the problem of expression-invariant three-dimensional face recognition.

A. M. Bronstein, M. M. Bronstein, R. Kimmel,

"Three-dimensional face recognition", Intl. Journal of Computer Vision (IJCV), Vol. 64/1, pp. 5-30, August 2005. Abstract: An expression-invariant 3D face recognition approach is presented. Our basic assumption is that facial expressions can be modelled as isometries of the facial surface. This allows us to construct expression-invariant representations of faces using the canonical forms approach. The result is an efficient and accurate face recognition algorithm, robust to facial expressions and able to distinguish between identical twins (the first two authors). We demonstrate a prototype system based on the proposed algorithm and compare its performance to classical face recognition methods. The numerical methods employed by our approach do not require the facial surface explicitly. The surface gradient field or the surface metric is sufficient for constructing the expression-invariant representation of any given face. This allows us to perform the 3D face recognition task while avoiding the surface reconstruction stage.

A. M. Bronstein, M. M. Bronstein, M. Zibulevsky, Y. Y. Zeevi,

"Sparse ICA for blind separation of transmitted and reflected images", Intl. Journal of Imaging Systems and Technology (IJIST), Vol. 15/1, pp. 84-91, 2005. Abstract: We address the problem of recovering a scene recorded through a semireflecting medium (i.e., a planar lens), with a virtual reflected image being superimposed on the image of the scene transmitted through the semireflecting lens. Recent studies propose imaging through a linear polarizer at several orientations to estimate the reflected and the transmitted components in the scene. In this study we extend the sparse ICA (SPICA) technique and apply it to the problem of separating the image of the scene without any a priori knowledge about its structure or statistics. Recent advances in the SPICA approach are discussed. Simulation and experimental results demonstrate the efficacy of the proposed methods.

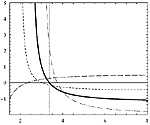

A. M. Bronstein, M. M. Bronstein, M. Zibulevsky,

"Quasi maximum likelihood blind deconvolution: super- and sub-Gaussianity versus consistency", IEEE Trans. Signal Processing, Vol. 53/7, pp. 2576-2579, July 2005. Abstract: In this note we consider the problem of MIMO quasi maximum likelihood (QML) blind deconvolution. We examine two classes of estimators, which are commonly believed to be suitable for super- and sub-Gaussian sources. We state the consistency conditions and demonstrate a distribution for which the studied estimators are unsuitable, in the sense that they are asymptotically unstable.

A. M. Bronstein, M. M. Bronstein, M. Zibulevsky,

"Relative optimization for blind deconvolution", IEEE Trans. Signal Processing, Vol. 53/6, pp. 2018-2026, June 2005. Abstract: We propose a relative optimization framework for quasi maximum likelihood (QML) blind deconvolution and the relative Newton method as its particular instance. The special Hessian structure allows fast construction and solution of the Newton system, resulting in a fast-convergent algorithm with iteration complexity comparable to that of gradient methods. We also propose the use of rational IIR restoration kernels, which constitute a richer family of filters than the traditionally used FIR kernels. We discuss different choices of non-linear functions suitable for deconvolution of super- and sub-Gaussian sources, and formulate the conditions under which the QML estimation is stable. Simulation results demonstrate the efficiency of the proposed methods.

M. M. Bronstein, A. M. Bronstein, M. Zibulevsky, Y. Y. Zeevi,

"Blind deconvolution of images using optimal sparse representations", IEEE Trans. Image Processing, Vol. 14/6, pp. 726-736, June 2005.

A. M. Bronstein, M. M. Bronstein, M. Zibulevsky,

"Blind source separation using block-coordinate relative Newton method", Signal Processing, Vol. 84/8, pp. 1447-1459, August 2004. Abstract: Presented here is a generalization of the relative Newton method, recently proposed for quasi maximum likelihood blind source separation. The special structure of the Hessian matrix allows performing block-coordinate Newton descent, which significantly reduces the computational complexity of the algorithm and boosts its performance. Simulations based on artificial and real data showed that the separation quality achieved by the proposed algorithm is superior to that of other accepted blind source separation methods.

A. M. Bronstein, M. M. Bronstein, M. Zibulevsky, Y. Y. Zeevi,

"Optimal nonlinear line-of-flight estimation in positron emission tomography", IEEE Trans. Nuclear Science, Vol. 50/3, pp. 421-426, June 2003. Abstract: We consider detection of high-energy photons in PET using thick scintillation crystals. The parallax effect and multiple Compton interactions in such crystals significantly reduce the accuracy of conventional detection methods. In order to estimate the photon line of flight based on photomultiplier responses, we use asymptotically optimal nonlinear techniques, implemented by feedforward and radial basis function (RBF) neural networks. Incorporating information about the angles of incidence of photons significantly improves the accuracy of estimation. The proposed estimators are fast enough to perform detection on conventional computers. Monte-Carlo simulation results show that our approach significantly outperforms the conventional Anger algorithm.

M. M. Bronstein, A. M. Bronstein, M. Zibulevsky, H. Azhari,

"Reconstruction in ultrasound diffraction tomography using non-uniform FFT", IEEE Trans. Medical Imaging, Vol. 21/11, pp. 1395-1401, November 2002. Abstract: We present an iterative reconstruction framework for diffraction ultrasound tomography. The use of broad-band illumination allows a significant reduction in the number of projections compared to straight-ray tomography. The proposed algorithm uses the forward nonuniform fast Fourier transform (NUFFT) for iterative Fourier inversion. Incorporating total variation regularization reduces noise and Gibbs phenomena while preserving edges. The complexity of the NUFFT-based reconstruction is comparable to that of the frequency-domain interpolation (gridding) algorithm, whereas the reconstruction accuracy (in the sense of the L2 and L∞ norms) is better.

| ||||||||||||||||||||