| ||||||||||||||||||||

|

Q. Qiu, J. Lezama, A. Bronstein, G. Sapiro, "ForestHash: Semantic hashing with shallow random forests and tiny convolutional networks",

Proc. ECCV, 2018. Abstract: Hash codes are efficient data representations for coping with the ever growing amounts of data. In this paper, we introduce a random forest semantic hashing scheme that embeds tiny convolutional neural networks (CNN) into shallow random forests, with near-optimal information-theoretic code aggregation among trees. We start with a simple hashing scheme, where random trees in a forest act as hashing functions by setting `1' for the visited tree leaf, and `0' for the rest. We show that traditional random forests fail to generate hashes that preserve the underlying similarity between the trees, rendering the random forests approach to hashing challenging. To address this, we propose to first randomly group arriving classes at each tree split node into two groups, obtaining a significantly simplified two-class classification problem, which can be handled using a light-weight CNN weak learner. Such random class grouping scheme enables code uniqueness by enforcing each class to share its code with different classes in different trees. A non-conventional low-rank loss is further adopted for the CNN weak learners to encourage code consistency by minimizing intra-class variations and maximizing inter-class distance for the two random class groups. Finally, we introduce an information-theoretic approach for aggregating codes of individual trees into a single hash code, producing a near-optimal unique hash for each class. The proposed approach significantly outperforms state-of-the-art hashing methods for image retrieval tasks on large-scale public datasets, while performing at the level of other state-of-the-art image classification techniques while utilizing a more compact and efficient scalable representation. This work proposes a principled and robust procedure to train and deploy in parallel an ensemble of light-weight CNNs, instead of simply going deeper. |
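As a rough illustration of the simple hashing scheme the abstract starts from (a `1` for the visited leaf of each tree and `0` elsewhere), here is a minimal sketch. The function names `forest_hash_code` and `hamming_distance` and the fixed `leaves_per_tree` parameter are assumptions made for this example. It does not cover the CNN weak learners, the random class grouping, or the information-theoretic code aggregation.

```python
def forest_hash_code(visited_leaves, leaves_per_tree):
    """Concatenate one indicator block per tree: 1 at the visited leaf, 0 elsewhere.

    visited_leaves: the leaf index reached in each tree (hypothetical input format).
    leaves_per_tree: number of leaves in every tree (assumed equal across trees).
    """
    code = []
    for leaf in visited_leaves:
        if not 0 <= leaf < leaves_per_tree:
            raise ValueError("leaf index out of range")
        bits = [0] * leaves_per_tree
        bits[leaf] = 1  # mark the leaf this sample landed in
        code.extend(bits)
    return code


def hamming_distance(code_a, code_b):
    """Count the positions where two equal-length codes differ."""
    if len(code_a) != len(code_b):
        raise ValueError("codes must have the same length")
    return sum(a != b for a, b in zip(code_a, code_b))
```

Under this encoding, two samples that reach different leaves in exactly one tree differ in two bits, so the Hamming distance between codes is twice the number of trees on which they disagree.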

|||||||||||||||||||

|

A. Tsitsulin, D. Mottin, P. Karras, A. Bronstein, E. Mueller, "NetLSD: Hearing the shape of a graph",

Proc. KDD, 2018. Abstract: Comparison among graphs is ubiquitous in graph analytics. However, it is a hard task in terms of the expressiveness of the employed similarity measure and the efficiency of its computation. Ideally, graph comparison should be invariant to the order of nodes and the sizes of compared graphs, adaptive to the scale of graph patterns, and scalable. Unfortunately, these properties have not been addressed together. Graph comparisons still rely on direct approaches, graph kernels, or representation-based methods, which are all inefficient and impractical for large graph collections. In this paper, we propose the Network Laplacian Spectral Descriptor (NetLSD): the first, to our knowledge, permutation- and size-invariant, scale-adaptive, and efficiently computable graph representation method that allows for straightforward comparisons of large graphs. NetLSD extracts a compact signature that inherits the formal properties of the Laplacian spectrum, specifically its heat or wave kernel; thus, it hears the shape of a graph. Our evaluation on a variety of real-world graphs demonstrates that it outperforms previous works in both expressiveness and efficiency. |
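To make the heat-kernel idea in the abstract concrete, the sketch below computes a heat trace signature h(t) = Σ_j exp(-t λ_j) from the eigenvalues λ_j of a graph Laplacian. The choice of the symmetric normalized Laplacian, the dense eigendecomposition, and the name `heat_trace_signature` are assumptions of this example. It is not the authors' implementation and is only practical for small graphs.

```python
import numpy as np


def heat_trace_signature(adjacency, timescales):
    """Return h(t) = sum_j exp(-t * lambda_j) for each t in `timescales`.

    adjacency: symmetric (n, n) adjacency matrix of an undirected graph.
    The lambda_j are eigenvalues of the symmetric normalized Laplacian
    (an assumption of this sketch).
    """
    A = np.asarray(adjacency, dtype=float)
    degrees = A.sum(axis=1)
    # Inverse square roots of the degrees; isolated nodes get a factor of 0.
    inv_sqrt = np.where(degrees > 0, 1.0 / np.sqrt(np.maximum(degrees, 1e-12)), 0.0)
    laplacian = np.eye(len(A)) - inv_sqrt[:, None] * A * inv_sqrt[None, :]
    eigenvalues = np.linalg.eigvalsh(laplacian)  # real spectrum of a symmetric matrix
    t = np.asarray(timescales, dtype=float)
    # One row per timescale, one column per eigenvalue; sum over eigenvalues.
    return np.exp(-np.outer(t, eigenvalues)).sum(axis=1)
```

Because the signature depends only on the spectrum, relabeling the nodes leaves it unchanged, which is the permutation invariance the abstract refers to.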

|||||||||||||||||||

|

E. Tsitsin, A. M. Bronstein, T. Hendler, M. Medvedovsky, "Passive electric impedance tomography",

Proc. EIT, 2018. Abstract: We introduce an electric impedance tomography modality without any active current injection. By loading the probe electrodes with a time-varying network of impedances, the proposed technique exploits electrical fields existing in the medium due to biological activity or EM interference from the environment or an implantable device. A phantom validation of the technique is presented. |

|||||||||||||||||||

|

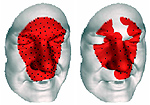

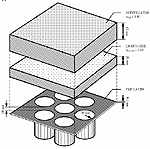

E. Tsitsin, T. Mund, A. M. Bronstein, "Printable anisotropic phantom for EEG with distributed current sources",

Proc. ISBI, 2018. Abstract: Presented is a phantom mimicking the electromagnetic properties of the human head. The fabrication is based on additive manufacturing (3D-printing) technology combined with an electrically conductive gel. The novel key features of the phantom are the controllable anisotropic electrical conductivity of the skull and the densely packed actively multiplexed monopolar current sources permitting interpolation of the measured gain function to any dipolar current source position and orientation within the head. The phantom was tested in a realistic environment, successfully simulating the possible signals from neural activations situated at any depth within the brain as well as EMI and motion artifacts. The proposed design can be readily repeated in any lab with access to a standard 100 micron precision 3D-printer. The meshes of the phantom are available from the corresponding author. |

|||||||||||||||||||

|

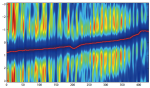

E. Tsitsin, M. Medvedovsky, A. M. Bronstein, "VibroEEG: Improved EEG source reconstruction by combined acoustic-electric imaging",

Proc. ISBI, 2018. Abstract: Electroencephalography (EEG) is the electrical neural activity recording modality with high temporal and low spatial resolution. Here we propose a novel technique that we call vibroEEG improving significantly the source localization accuracy of EEG. Our method combines electric potential acquisition in concert with acoustic excitation of the vibrational modes of the electrically active cerebral cortex which displace periodically the sources of the low frequency neural electrical activity. The sources residing on the maxima of the induced modes will be maximally weighted in the corresponding spectral components of the broadband signals measured on the noninvasive electrodes. In vibroEEG, for the first time the rich internal geometry of the cerebral cortex can be utilized to separate sources of neural activity lying close in the sense of the Euclidean metric. When the modes are excited locally using phased arrays the neural activity can essentially be probed at any cortical location. When a single transducer is used to induce the excitations, the EEG gain matrix is still being enriched with numerous independent gain vectors increasing its rank. We show theoretically and on numerical simulation that in both cases the source localization accuracy improves substantially. |

|||||||||||||||||||

|

T. Remez, O. Litany, R. Giryes, A. Bronstein, "Deep class-aware image denoising",

Proc. ICIP, 2017. Abstract: The increasing demand for high image quality in mobile devices brings forth the need for better computational enhancement techniques, and image denoising in particular. To this end, we propose a new fully convolutional deep neural network architecture which is simple yet powerful and achieves state-of-the-art performance for additive Gaussian noise removal. Furthermore, we claim that the personal photo-collections can usually be categorized into a small set of semantic classes. However simple, this observation has not been exploited in image denoising until now. We show that a significant boost in performance of up to 0.4dB PSNR can be achieved by making our network class-aware, namely, by fine-tuning it for images belonging to a specific semantic class. Relying on the hugely successful existing image classifiers, this research advocates for using a class-aware approach in all image enhancement tasks. |

|||||||||||||||||||

|

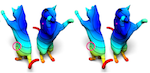

O. Litany, T. Remez, E. Rodolà, A. M. Bronstein, M. M. Bronstein, "Deep Functional Maps: Structured prediction for dense shape correspondence",

Proc. ICCV, 2017. Abstract: We introduce a new framework for learning dense correspondence between deformable 3D shapes. Existing learning based approaches model shape correspondence as a labelling problem, where each point of a query shape receives a label identifying a point on some reference domain; the correspondence is then constructed a posteriori by composing the label predictions of two input shapes. We propose a paradigm shift and design a structured prediction model in the space of functional maps, linear operators that provide a compact representation of the correspondence. We model the learning process via a deep residual network which takes dense descriptor fields defined on two shapes as input, and outputs a soft map between the two given objects. The resulting correspondence is shown to be accurate on several challenging benchmarks comprising multiple categories, synthetic models, real scans with acquisition artifacts, topological noise, and partiality. |

|||||||||||||||||||

|

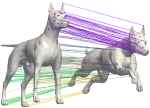

Z. Laehner, M. Vestner, A. Boyarski, O. Litany, R. Slossberg, T. Remez, E. Rodolà, A. Bronstein, M. Bronstein, R. Kimmel, D. Cremers, "Efficient deformable shape correspondence via kernel matching",

Proc. 3DV, 2017. Abstract: We present a method to match three dimensional shapes under non-isometric deformations, topology changes and partiality. We formulate the problem as matching between a set of pair-wise and point-wise descriptors, imposing a continuity prior on the mapping, and propose a projected descent optimization procedure inspired by difference of convex functions (DC) programming. Surprisingly, in spite of the highly non-convex nature of the resulting quadratic assignment problem, our method converges to a semantically meaningful and continuous mapping in most of our experiments, and scales well. We provide preliminary theoretical analysis and several interpretations of the method. |

|||||||||||||||||||

|

G. Alexandroni, Y. Podolsky, H. Greenspan, T. Remez, O. Litany, A. M. Bronstein, R. Giryes, "White matter fiber representation using continuous dictionary learning",

Proc. MICCAI, 2017. Abstract: With increasingly sophisticated Diffusion Weighted MRI acquisition methods and modelling techniques, very large sets of streamlines (fibers) are presently generated per imaged brain. These reconstructions of white matter architecture, which are important for human brain research and pre-surgical planning, require a large amount of storage and are often unwieldy and difficult to manipulate and analyze. This work proposes a novel continuous parsimonious framework in which signals are sparsely represented in a dictionary with continuous atoms. The significant innovation in our new methodology is the ability to train such continuous dictionaries, unlike previous approaches that either used pre-fixed continuous transforms or training with finite atoms. This leads to an innovative fiber representation method, which uses Continuous Dictionary Learning to sparsely code each fiber with high accuracy. This method is tested on numerous tractograms produced from the Human Connectome Project data and achieves state-of-the-art performances in compression ratio and reconstruction error. |

|||||||||||||||||||

|

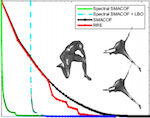

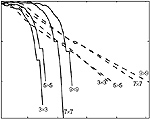

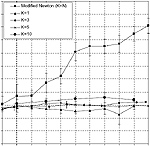

A. Boyarski, A. M. Bronstein, M. M. Bronstein, "Subspace least squares multidimensional scaling",

Proc. SSVM, 2017. Abstract: Multidimensional Scaling (MDS) is one of the most popular methods for dimensionality reduction and visualization of high dimensional data. Apart from these tasks, it also found applications in the field of geometry processing for the analysis and reconstruction of non-rigid shapes. In this regard, MDS can be thought of as a shape from metric algorithm, consisting of finding a configuration of points in the Euclidean space that realize, as isometrically as possible, some given distance structure. In the present work we cast the least squares variant of MDS (LS-MDS) in the spectral domain. This uncovers a multiresolution property of distance scaling which speeds up the optimization by a significant amount, while producing comparable, and sometimes even better, embeddings. |

|||||||||||||||||||

|

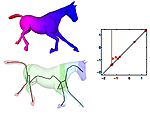

M. Vestner, R. Litman, E. Rodolà, A. Bronstein, D. Cremers, "Product Manifold Filter: Non-rigid shape correspondence via kernel density estimation in the product space",

Proc. CVPR, 2017. Abstract: Many algorithms for the computation of correspondences between deformable shapes rely on some variant of nearest neighbor matching in a descriptor space. Such are, for example, various point-wise correspondence recovery algorithms used as a post-processing stage in the functional correspondence framework. Such frequently used techniques implicitly make restrictive assumptions (e.g., near-isometry) on the considered shapes and in practice suffer from lack of accuracy and result in poor surjectivity. We propose an alternative recovery technique capable of guaranteeing a bijective correspondence and producing significantly higher accuracy and smoothness. Unlike other methods, our approach does not depend on the assumption that the analyzed shapes are isometric. We derive the proposed method from the statistical framework of kernel density estimation and demonstrate its performance on several challenging deformable 3D shape matching datasets. |

|||||||||||||||||||

|

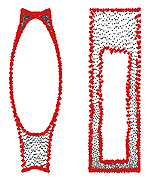

A. Bronstein, Y. Choukroun, R. Kimmel, M. Sela, "Consistent discretization and minimization of the L1 norm on manifolds",

Proc. 3DV, 2016. Abstract: The L1 norm has been tremendously popular in signal and image processing in the past two decades due to its sparsity-promoting properties. More recently, its generalization to non-Euclidean domains has been found useful in shape analysis applications. For example, in conjunction with the minimization of the Dirichlet energy, it was shown to produce a compactly supported quasi-harmonic orthonormal basis, dubbed compressed manifold modes. The continuous L1 norm on the manifold is often replaced by the vector l1 norm applied to sampled functions. We show that such an approach is incorrect in the sense that it does not consistently discretize the continuous norm and warn against its sensitivity to the specific sampling. We propose two alternative discretizations resulting in an iteratively reweighted l2 norm. We demonstrate the proposed strategy on the compressed modes problem, which reduces to a sequence of simple eigendecomposition problems not requiring non-convex optimization on Stiefel manifolds and producing more stable and accurate results. |

|||||||||||||||||||

|

R. Litman, A. Bronstein, "SpectroMeter: Amortized sublinear spectral approximation of distance on graphs",

Proc. 3DV, 2016. Abstract: We present a method to approximate pairwise distance on a graph, having an amortized sub-linear complexity in its size. The proposed method follows the so called heat method due to Crane et al. The only additional input are the values of the eigenfunctions of the graph Laplacian at a subset of the vertices. Using these values we estimate a random walk from the source points, and normalize the result into a unit gradient function. The eigenfunctions are then used to synthesize distance values abiding by these constraints at desired locations. We show that this method works in practice on different types of inputs ranging from triangular meshes to general graphs. We also demonstrate that the resulting approximate distance is accurate enough to be used as the input to a recent method for intrinsic shape correspondence computation. |

|||||||||||||||||||

|

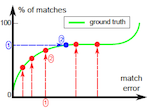

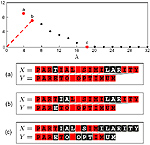

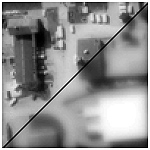

R. Litman, S. Korman, A. M. Bronstein, S. Avidan, "GMD: Global model detection via inlier rate estimation",

Proc. Computer Vision and Pattern Recognition (CVPR), 2015. Abstract: This work presents a novel approach for detecting inliers in a given set of correspondences (matches). It does so without explicitly identifying any consensus set, based on a method for inlier rate estimation (IRE). Given such an estimator for the inlier rate, we also present an algorithm that detects a globally optimal transformation. We provide a theoretical analysis of the IRE method using a stochastic generative model on the continuous spaces of matches and transformations. This model allows rigorous investigation of the limits of our IRE method for the case of 2D translation, further giving bounds and insights for the more general case. Our theoretical analysis is validated empirically and is shown to hold in practice for the more general case of 2D affinities. In addition, we show that the combined framework works on challenging cases of 2D homography estimation, with very few and possibly noisy inliers, where RANSAC generally fails. |

|||||||||||||||||||

|

X. Bian, H. Krim, A. M. Bronstein, L. Dai, "Sparse null space basis pursuit and analysis dictionary learning for high-dimensional data analysis",

Proc. International Conference on Acoustics, Speech, and Signal Processing (ICASSP), 2015. Abstract: Sparse models in dictionary learning have been successfully applied in a wide variety of machine learning and computer vision problems, and have also recently been of increasing research interest. Another interesting related problem based on a linear equality constraint, namely the sparse null space problem (SNS), first appeared in 1986, and has since inspired results on sparse basis pursuit. In this paper, we investigate the relation between the SNS problem and the analysis dictionary learning problem, and show that the SNS problem plays a central role, and may be utilized to solve dictionary learning problems. Moreover, we propose an efficient algorithm of sparse null space basis pursuit, and extend it to a solution of analysis dictionary learning. Experimental results on numerical synthetic data and real-world data are further presented to validate the performance of our method. |

||||||||||||||||||||

|

I. Sipiran, B. Bustos, T. Schreck, A. M. Bronstein, M. M. Bronstein, U. Castellani, S. Choi, L. Lai, H. Li, R. Litman, L. Sun,

"SHREC'15 Track: Scalability of non-rigid 3D shape retrieval",

Proc. EUROGRAPHICS Workshop on 3D Object Retrieval (3DOR), 2015. Abstract: Due to recent advances in 3D acquisition and modeling, increasingly large amounts of 3D shape data become available in many application domains. This raises not only the need for effective methods for 3D shape retrieval, but also efficient retrieval and robust implementations. Previous 3D retrieval challenges have mainly considered data sets in the range of a few thousands of queries. In the 2015 SHREC track on Scalability of 3D Shape Retrieval we provide a benchmark with more than 96 thousand shapes. The data set is based on a non-rigid retrieval benchmark enhanced by other existing shape benchmarks. From the baseline models, a large set of partial objects were automatically created by simulating a range-image acquisition process. Four teams have participated in the track, with most methods providing very good to near-perfect retrieval results, and one less complex baseline method providing fair performance. Timing results indicate that three of the methods including the latter baseline one provide near-interactive time query execution. Generally, the cost of data pre-processing varies depending on the method. |

|||||||||||||||||||

|

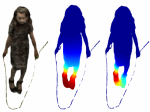

O. Menashe, A. M. Bronstein, "Real-time compressed imaging of scattering volumes",

Proc. International Conference on Image Processing (ICIP), 2014. Abstract: We propose a method and a prototype imaging system for real-time reconstruction of volumetric piecewise-smooth scattering media. The volume is illuminated by a sequence of structured binary patterns emitted from a fan beam projector, and the scattered light is collected by a two-dimensional sensor, thus creating an under-complete set of compressed measurements. We show a fixed-complexity and latency reconstruction algorithm capable of estimating the scattering coefficients in real-time. We also show a simple greedy algorithm for learning the optimal illumination patterns. Our results demonstrate faithful reconstruction from highly compressed measurements. Furthermore, a method for compressed registration of the measured volume to a known template is presented, showing excellent alignment with just a single projection. Though our prototype system operates in visible light, the presented methodology is suitable for fast x-ray scattering imaging, in particular in real-time vascular medical imaging. |

|||||||||||||||||||

|

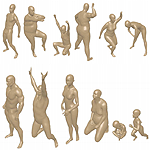

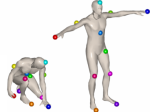

D. Pickup, X. Sun, P. L. Rosin, R. R. Martin, Z. Cheng, Z. Lian, M. Aono, A. Ben Hamza, A. M. Bronstein, M. M. Bronstein, S. Bu, U. Castellani, S. Cheng, V. Garro, A. Giachetti, A. Godil, J. Han, H. Johan, L. Lai, B. Li, C. Li, H. Li, R. Litman, X. Liu, Z. Liu, Y. Lu, A. Tatsuma, J. Ye,

"Shape Retrieval of Non-Rigid 3D Human Models",

Proc. EUROGRAPHICS Workshop on 3D Object Retrieval (3DOR), 2014. Abstract: We have created a new dataset for non-rigid 3D shape retrieval, one that is much more challenging than existing datasets. Our dataset features exclusively human models, in a variety of body shapes and poses. 3D models of humans are commonly used within computer graphics and vision, therefore the ability to distinguish between body shapes is an important feature for shape retrieval methods. In this track nine groups have submitted the results of a total of 22 different methods which have been tested on our new dataset. |

|||||||||||||||||||

|

S. Biasotti, A. Cerri, A. M. Bronstein, M. M. Bronstein,

"Quantifying 3D shape similarity using maps: Recent trends, applications and perspectives",

Proc. EUROGRAPHICS STARS, 2014. Abstract: Shape similarity is an acute issue in Computer Vision and Computer Graphics that involves many aspects of human perception of the real world, including judged and perceived similarity concepts, deterministic and probabilistic decisions and their formalization. 3D models carry multiple information with them (e.g., geometry, topology, texture, time evolution, appearance), which can be thought of as the filter that drives the recognition process. Assessing and quantifying the similarity between 3D shapes is necessary to explore large datasets of shapes, and tune the analysis framework to the user's needs. Many efforts have been made in this sense, including several attempts to formalize suitable notions of similarity and distance among 3D objects and their shapes. In the last years, 3D shape analysis knew a rapidly growing interest in a number of challenging issues, ranging from deformable shape similarity to partial matching and view-point selection. In this panorama, we focus on methods which quantify shape similarity (between two objects and sets of models) and compare these shapes in terms of their properties (i.e., global and local, geometric, differential and topological) conveyed by (sets of) maps. After presenting in detail the theoretical foundations underlying these methods, we review their usage in a number of 3D shape application domains, ranging from matching and retrieval to annotation and segmentation. Particular emphasis will be given to analyze the suitability of the different methods for specific classes of shapes (e.g. rigid or isometric shapes), as well as the flexibility of the various methods at the different stages of the shape comparison process. Finally, the most promising directions for future research developments are discussed. |

|||||||||||||||||||

|

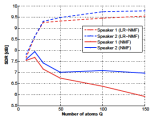

P. Sprechmann, A. M. Bronstein, G. Sapiro, "Supervised non-Euclidean sparse NMF via bilevel optimization with applications to speech enhancement",

Proc. Joint Workshop on Hands-free Speech Communication and Microphone Arrays (HSCMA), 2014. Abstract: Traditionally, NMF algorithms consist of two separate stages: a training stage, in which a generative model is learned; and a testing stage in which the pre-learned model is used in a high level task such as enhancement, separation, or classification. As an alternative, we propose a task-supervised NMF method for the adaptation of the basis spectra learned in the first stage to enhance the performance on the specific task used in the second stage. We cast this problem as a bilevel optimization program that can be efficiently solved via stochastic gradient descent. The proposed approach is general enough to handle sparsity priors of the activations, and allow non-Euclidean data terms such as beta-divergences. The framework is evaluated on single-channel speech enhancement tasks. |

||||||||||||||||||||

|

J. Masci, A. M. Bronstein, M. M. Bronstein, P. Sprechmann, G. Sapiro, "Sparse similarity-preserving hashing",

Proc. International Conference on Learning Representations (ICLR), 2014. Abstract: In recent years, a lot of attention has been devoted to efficient nearest neighbor search by means of similarity-preserving hashing. One of the plights of existing hashing techniques is the intrinsic trade-off between performance and computational complexity: while longer hash codes allow for lower false positive rates, it is very difficult to increase the embedding dimensionality without incurring very high false negative rates or prohibitive computational costs. In this paper, we propose a way to overcome this limitation by enforcing the hash codes to be sparse. Sparse high-dimensional codes enjoy the low false positive rates typical of long hashes, while keeping the false negative rates similar to those of a shorter dense hashing scheme with equal number of degrees of freedom. We use a tailored feed-forward neural network for the hashing function. Extensive experimental evaluation involving visual and multi-modal data shows the benefits of the proposed method. |

|||||||||||||||||||

|

|

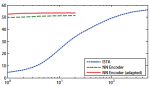

P. Sprechmann, R. Litman, T. Ben Yakar, A. M. Bronstein, G. Sapiro,

"Efficient supervised sparse analysis and synthesis operators",

Proc. Neural Information Proc. Systems (NIPS), 2013. Abstract: In this paper, we propose a new and computationally efficient framework for learning sparse models. We formulate a unified approach that contains as particular cases models promoting sparse synthesis and analysis type of priors, and mixtures thereof. The supervised training of the proposed model is formulated as a bilevel optimization problem, in which the operators are optimized to achieve the best possible performance on a specific task, e.g., reconstruction or classification. By restricting the operators to be shift invariant, our approach can be thought as a way of learning analysis+synthesis sparsity-promoting convolutional operators. Leveraging recent ideas on fast trainable regressors designed to approximate exact sparse codes, we propose a way of constructing feed-forward neural networks capable of approximating the learned models at a fraction of the computational cost of exact solvers. In the shift-invariant case, this leads to a principled way of constructing task-specific convolutional networks. We illustrate the proposed models on several experiments in music analysis and image processing applications. |

|||||||||||||||||||

|

T. Ben Yakar, R. Litman, P. Sprechmann, A. M. Bronstein, G. Sapiro,

"Bilevel sparse models for polyphonic music transcription",

Proc. Annual Conference of the Intl. Society for Music Info. Retrieval (ISMIR), 2013. Abstract: In this work, we propose a trainable sparse model for automatic polyphonic music transcription, which incorporates several successful approaches into a unified optimization framework. Our model combines unsupervised synthesis models similar to latent component analysis and nonnegative factorization with metric learning techniques that allow supervised discriminative learning. We develop efficient stochastic gradient training schemes allowing unsupervised, semi-, and fully supervised training of the model as well as its adaptation to test data. We show efficient fixed complexity and latency approximation that can replace iterative minimization algorithms in time-critical applications. Experimental evaluation on synthetic and real data shows promising initial results. |

|||||||||||||||||||

|

P. Sprechmann, A. M. Bronstein, J.-M. Morel, G. Sapiro,

"Audio restoration from multiple copies",

Proc. International Conference on Acoustics, Speech, and Signal Processing (ICASSP), 2013. Abstract: A method for removing impulse noise from audio signals by fusing multiple copies of the same recording is introduced in this paper. The proposed algorithm exploits the fact that while in general multiple copies of a given recording are available, all sharing the same master, most degradations in audio signals are record-dependent. Our method first seeks the optimal non-rigid alignment of the signals that is robust to the presence of sparse outliers with arbitrary magnitude. Unlike previous approaches, we simultaneously find the optimal alignment of the signals and impulsive degradation. This is obtained via continuous dynamic time warping computed by solving an Eikonal equation. We propose to use our approach in the derivative domain, reconstructing the signal by solving an inverse problem that resembles the Poisson image editing technique. The proposed framework is here illustrated and tested in the restoration of old gramophone recordings showing promising results; however, it can be used in other applications where different copies of the signal of interest are available and the degradations are copy-dependent. |

|||||||||||||||||||

|

P. Sprechmann, A. M. Bronstein, M. M. Bronstein, G. Sapiro,

"Learnable low rank sparse models for speech denoising",

Proc. International Conference on Acoustics, Speech, and Signal Processing (ICASSP), 2013. Abstract: In this paper we present a framework for real time enhancement of speech signals. Our method leverages a new process-centric approach for sparse and parsimonious models, where the representation pursuit is obtained applying a deterministic function or process rather than solving an optimization problem. We first propose a rank-regularized robust version of non-negative matrix factorization (NMF) for modeling time-frequency representations of speech signals in which the spectral frames are decomposed as sparse linear combinations of atoms of a low-rank dictionary. Then, a parametric family of pursuit processes is derived from the iteration of the proximal descent method for solving this model. We present several experiments showing successful results and the potential of the proposed framework. Incorporating discriminative learning makes the proposed method significantly outperform exact NMF algorithms, with fixed latency and at a fraction of its computational complexity. |

|||||||||||||||||||

|

O. Litany, A. M. Bronstein, M. M. Bronstein,

"Putting the pieces together: regularized multi-shape partial matching",

Proc. Workshop on Nonrigid Shape Analysis and Deformable Image Alignment (NORDIA), 2012. Abstract: Multi-part shape matching is an important class of problems, arising in many fields such as computational archaeology, biology, geometry processing, computer graphics and vision. In this paper, we address the problem of simultaneous matching and segmentation of multiple shapes. We assume to be given a reference shape and multiple parts partially matching the reference. Each of these parts can have additional clutter, have overlap with other parts, or there might be missing parts. We show experimental results of efficient and accurate assembly of fractured synthetic and real objects. |

|||||||||||||||||||

|

A. Kovnatsky, A. M. Bronstein, M. M. Bronstein,

"Stable spectral mesh filtering",

Proc. Workshop on Nonrigid Shape Analysis and Deformable Image Alignment (NORDIA), 2012. Abstract: The rapid development of 3D acquisition technology has brought with it the need to perform standard signal processing operations such as filtering on 3D data. It has been shown that the eigenfunctions of the Laplace-Beltrami operator (manifold harmonics) of a surface play the role of the Fourier basis in the Euclidean space; it is thus possible to formulate signal analysis and synthesis in the manifold harmonics basis. In particular, geometry filtering can be carried out in the manifold harmonics domain by decomposing the embedding coordinates of the shape in this basis. However, since the basis functions depend on the shape itself, such filtering is valid only for weak (near all-pass) filters, and produces severe artifacts otherwise. In this paper, we analyze this problem and propose the fractional filtering approach, wherein we iteratively apply weak fractional powers of the filter, followed by the update of the basis functions. Experimental results show that such a process produces more plausible and meaningful results. |

|||||||||||||||||||

|

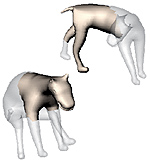

G. Rosman, A. M. Bronstein, M. M. Bronstein, X.-C. Tai, R. Kimmel,

"Group-valued regularization for analysis of articulated motion",

Proc. Workshop on Nonrigid Shape Analysis and Deformable Image Alignment (NORDIA), 2012. Abstract: We present a novel method for estimation of articulated motion in depth scans. The method is based on a framework for regularization of vector- and matrix-valued functions on parametric surfaces. We extend augmented-Lagrangian total variation regularization to smooth rigid motion cues on the scanned 3D surface obtained from a range scanner. We demonstrate the resulting smoothed motion maps to be a powerful tool in articulated scene understanding, providing a basis for rigid parts segmentation, with few prior assumptions on the scene, despite the noisy depth measurements that often appear in commodity depth scanners. |

|||||||||||||||||||

|

P. Sprechmann, A. M. Bronstein, G. Sapiro,

"Real-time online singing voice separation from monaural recordings using robust low-rank modeling",

Proc. Annual Conference of the Intl. Society for Music Info. Retrieval (ISMIR), 2012. Abstract: Separating the leading vocals from the musical accompaniment is a challenging task that appears naturally in several music processing applications. Robust principal component analysis (RPCA) has recently been applied to this problem, producing very successful results. The method decomposes the signal into a low-rank component corresponding to the accompaniment with its repetitive structure, and a sparse component corresponding to the voice with its quasi-harmonic structure. In this paper we first introduce a non-negative variant of RPCA, termed robust low-rank non-negative matrix factorization (RNMF). This new framework better suits audio applications. We then propose two efficient feed-forward architectures that approximate the RPCA and RNMF with low latency and a fraction of the complexity of the original optimization method. These approximants allow incorporating elements of unsupervised, semi- and fully-supervised learning into the RPCA and RNMF frameworks. Our basic implementation shows several orders of magnitude speedup compared to the exact solvers with no performance degradation, and allows online and faster-than-real-time processing. Evaluation on the MIR-1K dataset demonstrates state-of-the-art performance. |

|||||||||||||||||||

|

P. Sprechmann, A. M. Bronstein, G. Sapiro,

"Learning efficient structured sparse models",

Proc. Intl. Conference on Machine Learning (ICML), 2012. Abstract: We present a comprehensive framework for structured sparse coding and modeling extending the recent ideas of using learnable fast regressors to approximate exact sparse codes. For this purpose, we propose an efficient feed forward architecture derived from the iteration of the block-coordinate algorithm. This architecture approximates the exact structured sparse codes with a fraction of the complexity of the standard optimization methods. We also show that by using different training objective functions, the proposed learnable sparse encoders are not only restricted to be approximants of the exact sparse code for a pre-given dictionary, but can be rather used as full-featured sparse encoders or even modelers. A simple implementation shows several orders of magnitude speedup compared to the state-of-the-art exact optimization algorithms at minimal performance degradation, making the proposed framework suitable for real time and large-scale applications. |

|||||||||||||||||||

|

I. Kokkinos, M. M. Bronstein, R. Litman, A. M. Bronstein,

"Intrinsic shape context descriptors for deformable shapes",

Proc. Computer Vision and Pattern Recognition (CVPR), 2012. Abstract: In this work, we present intrinsic shape context (ISC) descriptors for 3D shapes. We generalize to surfaces the polar sampling of the image domain used in shape contexts; for this purpose, we chart the surface by shooting geodesics outward from the point being analyzed; ‘angle’ is treated as tantamount to geodesic shooting direction, and radius as geodesic distance. To deal with orientation ambiguity, we exploit properties of the Fourier transform. Our charting method is intrinsic, i.e., invariant to isometric shape transformations. The resulting descriptor is a meta-descriptor that can be applied to any photometric or geometric property field defined on the shape; in particular, we can leverage recent developments in intrinsic shape analysis and construct ISC based on state-of-the-art dense shape descriptors such as heat kernel signatures. Our experiments demonstrate a notable improvement in shape matching on standard benchmarks. |

|||||||||||||||||||

|

E. Rodola, A. M. Bronstein, A. Albarelli, F. Bergamasco, A. Torsello,

"A game-theoretic approach to deformable shape matching",

Proc. Computer Vision and Pattern Recognition (CVPR), 2012. Abstract: We consider the problem of minimum distortion intrinsic correspondence between deformable shapes, many useful formulations of which give rise to the NP-hard quadratic assignment problem (QAP). Previous attempts to use the spectral relaxation have had limited success due to the lack of sparsity of the obtained “fuzzy” solution. In this paper, we adopt the recently introduced alternative L1 relaxation of the QAP based on the principles of game theory. We relate it to the Gromov and Lipschitz metrics between metric spaces and demonstrate on state-of-the-art benchmarks that the proposed approach is capable of finding very accurate sparse correspondences between deformable shapes. |

|||||||||||||||||||

|

A. Zabatani, A. M. Bronstein,

"Parallelized algorithms for rigid surface alignment on GPU",

Proc. EUROGRAPHICS Workshop on 3D Object Retrieval (3DOR), 2012. Abstract: Alignment and registration of rigid surfaces is a fundamental computational geometric problem with applications ranging from medical imaging and automated target recognition to robot navigation, just to mention a few. The family of the iterative closest point (ICP) algorithms introduced by Chen and Medioni and Besl and McKay and improved over the three decades that followed constitutes a classical approach to the problem. However, with the advent of geometry acquisition technologies and the applications they enable, it has become necessary to align in real time dense surfaces containing millions of points. The classical ICP algorithms, being essentially sequential procedures, are unable to address the need. In this study, we follow the recent work by Mitra et al. considering ICP from the point of view of point-to-surface Euclidean distance map approximation. We propose a variant of a k-d tree data structure to store the approximation, and show its efficient parallelization on modern graphics processors. The flexibility of our implementation allows using different distance approximation schemes with controllable trade-off between accuracy and complexity. It also allows almost straightforward adaptation to richer transformation groups. Experimental evaluation of the proposed approaches on a state-of-the-art GPU on very large datasets containing around 10^6 vertices shows real-time performance superior by up to three orders of magnitude compared to an efficient CPU-based version. Resources: code (anonymous svn) - for non-commercial use only! |

|||||||||||||||||||

|

G. Rosman, A. M. Bronstein, M. M. Bronstein, R. Kimmel,

"Articulated motion segmentation of point clouds by group-valued regularization",

Proc. EUROGRAPHICS Workshop on 3D Object Retrieval (3DOR), 2012. Abstract: Motion segmentation for articulated objects is an important topic of research. Yet such a segmentation should be as free as possible from underlying assumptions so as to fit general scenes and objects. In this paper we demonstrate an algorithm for articulated motion segmentation of 3D point clouds, free of any assumptions on the underlying model and yet firmly set in a well-defined variational framework. Results on scanned images show the generality of the proposed technique and its robustness to scanning artifacts and noise. |

|||||||||||||||||||

|

A. Kovnatsky, M. M. Bronstein, A. M. Bronstein, D. Raviv, R. Kimmel,

"Affine-invariant photometric heat kernel signatures",

Proc. EUROGRAPHICS Workshop on 3D Object Retrieval (3DOR), 2012. Abstract: In this paper, we explore the use of the diffusion geometry framework for the fusion of geometric and photometric information in local shape descriptors. Our construction is based on the definition of a modified metric, which combines geometric and photometric information, and then the diffusion process on the shape manifold is simulated. Experimental results show that such data fusion is useful in coping with shape retrieval experiments, where pure geometric and pure photometric methods fail. Apart from the retrieval task, the proposed diffusion process may be employed in other applications. |

|||||||||||||||||||

|

J. Pokrass, A. M. Bronstein, M. M. Bronstein,

"A correspondence-less approach to matching of deformable shapes",

Proc. Scale Space and Variational Methods (SSVM), 2011. Abstract: Finding a match between partially available deformable shapes is a challenging problem with numerous applications. The problem is usually approached by computing local descriptors on a pair of shapes and then establishing a point-wise correspondence between the two. In this paper, we introduce an alternative correspondence-less approach to matching fragments to an entire shape undergoing a non-rigid deformation. We use diffusion geometric descriptors and optimize over the integration domains on which the integral descriptors of the two parts match. The problem is regularized using the Mumford-Shah functional. We show an efficient discretization based on the Ambrosio-Tortorelli approximation generalized to triangular meshes. Experiments demonstrating the success of the proposed method are presented. |

|||||||||||||||||||

|

A. Kovnatsky, M. M. Bronstein, A. M. Bronstein, R. Kimmel,

"Photometric heat kernel signatures",

Proc. Scale Space and Variational Methods (SSVM), 2011. Abstract: In this paper, we explore the use of the diffusion geometry framework for the fusion of geometric and photometric information in local heat kernel signature shape descriptors. Our construction is based on the definition of a diffusion process on the shape manifold embedded into a high-dimensional space where the embedding coordinates represent the photometric information. Experimental results show that such data fusion is useful in coping with different challenges of shape analysis where pure geometric and pure photometric methods fail. |

|||||||||||||||||||

|

J. Aflalo, A. M. Bronstein, M. M. Bronstein, R. Kimmel,

"Deformable shape retrieval by learning diffusion kernels",

Proc. Scale Space and Variational Methods (SSVM), 2011. Abstract: In classical signal processing, it is common to analyze and process signals in the frequency domain, by representing the signal in the Fourier basis, and filtering it by applying a transfer function on the Fourier coefficients. In some applications, it is possible to design an optimal filter. A classical example is the Wiener filter that achieves a minimum mean squared error estimate for signal denoising. Here, we adopt similar concepts to construct optimal diffusion geometric shape descriptors. The analogue of the Fourier basis is the set of eigenfunctions of the Laplace-Beltrami operator, in which many geometric constructions, such as diffusion metrics, can be represented. By designing a filter of the Laplace-Beltrami eigenvalues, it is theoretically possible to achieve invariance to different shape transformations, like scaling. Given a set of shape classes with different transformations, we learn the optimal filter by minimizing the ratio between knowingly similar and knowingly dissimilar diffusion distances it induces. The output of the proposed framework is a filter that is optimally tuned to handle transformations that characterize the training set. |

|||||||||||||||||||

|

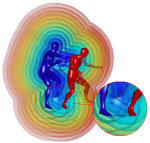

G. Rosman, M. M. Bronstein, A. M. Bronstein, A. Wolf, R. Kimmel,

"Group-valued regularization framework for motion segmentation of dynamic non-rigid shapes",

Proc. Scale Space and Variational Methods (SSVM), 2011. Abstract: Understanding of articulated shape motion plays an important role in many applications in the mechanical engineering, movie industry, graphics, and vision communities. In this paper, we study motion-based segmentation of articulated 3D shapes into rigid parts. We pose the problem as finding a group-valued map between the shapes describing the motion, forcing it to favor piecewise rigid motions. Our computation follows the spirit of the Ambrosio-Tortorelli scheme for Mumford-Shah segmentation, with a diffusion component suited for the group nature of the motion model. Experimental results demonstrate the effectiveness of the proposed method in non-rigid motion segmentation. |

|||||||||||||||||||

|

C. Wang, M. M. Bronstein, A. M. Bronstein, N. Paragios,

"Discrete minimum distortion correspondence problems for non-rigid shape matching",

Proc. Scale Space and Variational Methods (SSVM), 2011. Abstract: Similarity and correspondence are two fundamental archetype problems in shape analysis, encountered in numerous applications in computer vision and pattern recognition. Many methods for shape similarity and correspondence boil down to the minimum-distortion correspondence problem, in which two shapes are endowed with certain structure, and one attempts to find the matching with smallest structure distortion between them. Defining structures invariant to some class of shape transformations results in an invariant minimum-distortion correspondence or similarity. In this paper, we model shapes using local and global structures, formulate the invariant correspondence problem as binary graph labeling, and show how different choices of structure result in invariance under various classes of deformations. |

|||||||||||||||||||

|

A. Hooda, M. M. Bronstein, A. M. Bronstein, R. Horaud,

"Shape palindromes: analysis of intrinsic symmetries in 2D articulated shapes",

Proc. Scale Space and Variational Methods (SSVM), 2011. Abstract: Analysis of intrinsic symmetries of non-rigid and articulated shapes is an important problem in pattern recognition with numerous applications ranging from medicine to computational aesthetics. Considering articulated planar shapes as closed curves, we show how to represent their extrinsic and intrinsic symmetries as self-similarities of local descriptor sequences, which in turn have a simple interpretation in the frequency domain. The problem of symmetry detection and analysis thus boils down to analysis of descriptor sequence patterns. For that purpose, we show two efficient computational methods: one based on Fourier analysis, and another on dynamic programming. Metaphorically, the latter can be compared to finding palindromes in text sequences. |

|||||||||||||||||||

|

D. Raviv, A. M. Bronstein, M. M. Bronstein, R. Kimmel, N. Sochen,

"Affine-invariant diffusion geometry for the analysis of deformable 3D shapes",

Proc. Computer Vision and Pattern Recognition (CVPR), 2011. Abstract: We introduce an (equi-)affine invariant diffusion geometry by which surfaces that go through squeeze and shear transformations can still be properly analyzed. The definition of an affine invariant metric enables us to construct an invariant Laplacian from which local and global geometric structures are extracted. Applications of the proposed framework demonstrate its power in generalizing and enriching the existing set of tools for shape analysis. |

|||||||||||||||||||

|

F. Michel, M. M. Bronstein, A. M. Bronstein, N. Paragios,

"Boosted metric learning for 3D multi-modal deformable registration",

Proc. Intl. Symposium on Biomed. Imag. (ISBI), 2011. Abstract: Defining a suitable metric is one of the biggest challenges in deformable image fusion from different modalities. In this paper, we propose a novel approach for multi-modal metric learning in the deformable registration framework that consists of embedding data from both modalities into a common metric space whose metric is used to parametrize the similarity. Specifically, we use image representation in the Fourier/Gabor space which introduces invariance to the local pose parameters, and the Hamming metric as the target embedding space, which allows constructing the embedding using boosted learning algorithms. The resulting metric is incorporated into a discrete optimization framework. Very promising results demonstrate the potential of the proposed method. |

|||||||||||||||||||

|

D. Raviv, M. M. Bronstein, A. M. Bronstein, R. Kimmel,

"Volumetric heat kernel signatures",

Proc. Intl. Workshop on 3D Object Retrieval, ACM Multimedia, 2010. Abstract: Invariant shape descriptors are instrumental in numerous shape analysis tasks including deformable shape comparison, registration, classification, and retrieval. Most existing constructions model a 3D shape as a two-dimensional surface describing the shape boundary, typically represented as a triangular mesh or a point cloud. Using intrinsic properties of the surface, invariant descriptors can be designed. One such example is the recently introduced heat kernel signature, based on the Laplace-Beltrami operator of the surface. In many applications, however, a volumetric shape model is more natural and convenient. Moreover, modeling shape deformations as approximate isometries of the volume of an object, rather than its boundary, better captures natural behavior of non-rigid deformations in many cases. Here, we extend the idea of heat kernel signature to robust isometry-invariant volumetric descriptors, and show their utility in shape retrieval. The proposed approach achieves state-of-the-art results on the SHREC 2010 large-scale shape retrieval benchmark. |

|||||||||||||||||||

|

N. Mitra, A. M. Bronstein, M. M. Bronstein,

"Intrinsic regularity detection in 3D geometry",

Proc. European Conf. Computer Vision (ECCV), 2010. Abstract: Automatic detection of symmetries, regularity, and repetitive structures in 3D geometry is a fundamental problem in shape analysis and pattern recognition with applications in computer vision and graphics. Especially challenging is to detect intrinsic regularity, where the repetitions are on an intrinsic grid, without any apparent Euclidean pattern to describe the shape, but arising out of (near) isometric deformation of the underlying surface. In this paper, we employ multidimensional scaling to reduce the problem of intrinsic structure detection to a simpler problem of 2D grid detection. Potential 2D grids are then identified using an autocorrelation analysis, refined using local fitting, validated, and finally projected back to the spatial domain. We test the detection algorithm on a variety of scanned plaster models in the presence of imperfections like missing data, noise and outliers. We also present a range of applications including scan completion, shape editing, super-resolution, and structural correspondence. |

|||||||||||||||||||

|

A. M. Bronstein, M. M. Bronstein,

"Spatially-sensitive affine-invariant image descriptors",

Proc. European Conf. Computer Vision (ECCV), 2010. Abstract: Invariant image descriptors play an important role in many computer vision and pattern recognition problems such as image search and retrieval. A dominant paradigm today is that of "bags of features", a representation of images as distributions of primitive visual elements. The main disadvantage of this approach is the loss of spatial relations between features, which often carry important information about the image. In this paper, we show how to construct spatially-sensitive image descriptors in which both the features and their relation are affine-invariant. Our construction is based on a vocabulary of pairs of features coupled with a vocabulary of invariant spatial relations between the features. Experimental results show the advantage of our approach in image retrieval applications. |

|||||||||||||||||||

|

M. M. Bronstein, A. M. Bronstein, F. Michel, N. Paragios,

"Data fusion through cross-modality metric learning using similarity-sensitive hashing",

Proc. Computer Vision and Pattern Recognition (CVPR), 2010. Abstract: Visual understanding is often based on measuring similarity between observations. Learning similarities specific to a certain perception task from a set of examples has been shown to be advantageous in various computer vision and pattern recognition problems. In many important applications, the data that one needs to compare come from different representations or modalities, and the similarity between such data operates on objects that may have different and often incommensurable structure and dimensionality. In this paper, we propose a framework for supervised similarity learning based on embedding the input data from two arbitrary spaces into the Hamming space. The mapping is expressed as a binary classification problem with positive and negative examples, and can be efficiently learned using boosting algorithms. The utility and efficiency of such a generic approach are demonstrated on several challenging applications including cross-representation shape retrieval and alignment of multi-modal medical images. Resources: CVPR trailer video |

|||||||||||||||||||

|

A. M. Bronstein, M. M. Bronstein, U. Castellani, B. Falcidieno, A. Fusiello, A. Godil,

L. J. Guibas, I. Kokkinos, Z. Lian, M. Ovsjanikov, G. Patané, M. Spagnuolo, R. Toldo,

"SHREC 2010: robust large-scale shape retrieval benchmark",

Proc. EUROGRAPHICS Workshop on 3D Object Retrieval (3DOR), 2010. Abstract: SHREC’10 robust large-scale shape retrieval benchmark simulates a retrieval scenario, in which the queries include multiple modifications and transformations of the same shape. The benchmark allows evaluating how algorithms cope with certain classes of transformations and what is the strength of the transformations that can be dealt with. The present paper is a report of the SHREC’10 robust large-scale shape retrieval benchmark results. Resources: SHREC robust large-scale shape retrieval benchmark |

|||||||||||||||||||

|

A. M. Bronstein, M. M. Bronstein, B. Bustos, U. Castellani, M. Crisani, B. Falcidieno,

L. J. Guibas, I. Kokkinos, V. Murino, M. Ovsjanikov, G. Patané, I. Sipiran, M. Spagnuolo, J. Sun,

"SHREC 2010: robust feature detection and description benchmark",

Proc. EUROGRAPHICS Workshop on 3D Object Retrieval (3DOR), 2010. Abstract: Feature-based approaches have recently become very popular in computer vision and image analysis applications, and are becoming a promising direction in shape retrieval applications. The SHREC’10 robust feature detection and description benchmark simulates the feature detection and description stage of feature-based shape retrieval algorithms. The benchmark tests the performance of shape feature detectors and descriptors under a wide variety of different transformations. The benchmark allows evaluating how algorithms cope with certain classes of transformations and what is the strength of the transformations that can be dealt with. The present paper is a report of the SHREC’10 robust feature detection and description benchmark results. Resources: SHREC robust feature detection and description benchmark |

|||||||||||||||||||

|

A. M. Bronstein, M. M. Bronstein, U. Castellani, A. Dubrovina, L. J. Guibas, R. P. Horaud, R. Kimmel,

D. Knossow, E. von Lavante, D. Mateus, M. Ovsjanikov, A. Sharma,

"SHREC 2010: robust correspondence benchmark",

Proc. EUROGRAPHICS Workshop on 3D Object Retrieval (3DOR), 2010. Abstract: SHREC’10 robust correspondence benchmark simulates a one-to-one shape matching scenario, in which one of the shapes undergoes multiple modifications and transformations. The benchmark allows evaluating how correspondence algorithms cope with certain classes of transformations and what is the strength of the transformations that can be dealt with. The present paper is a report of the SHREC’10 robust correspondence benchmark results. Resources: SHREC robust correspondence benchmark |

|||||||||||||||||||

|

D. Raviv, A. M. Bronstein, M. M. Bronstein, R. Kimmel, G. Sapiro,

"Diffusion symmetries of non-rigid shapes",

Proc. Intl. Symposium on 3D Data Processing, Visualization and Transmission (3DPVT), 2010. Abstract: Detection and modeling of self-similarity and symmetry is important in shape recognition, matching, synthesis, and reconstruction. While the detection of rigid shape symmetries is well-established, the study of symmetries in non-rigid shapes is a much less researched problem. A particularly challenging setting is the detection of symmetries in non-rigid shapes affected by topological noise and asymmetric connectivity. In this paper, we treat shapes as metric spaces, with the metric induced by heat diffusion properties, and define non-rigid symmetries as self-isometries with respect to the diffusion metric. Experimental results show the advantage of the diffusion metric over the previously proposed geodesic metric for exploring intrinsic symmetries of bendable shapes with possible topological irregularities. |

|||||||||||||||||||

|

M. Ovsjanikov, A. M. Bronstein, M. M. Bronstein, L. Guibas,

"ShapeGoogle: a computer vision approach for invariant shape retrieval",

Proc. Workshop on Nonrigid Shape Analysis and Deformable Image Alignment (NORDIA), 2009. Abstract: Feature-based methods have recently gained popularity in computer vision and pattern recognition communities, in applications such as object recognition and image retrieval. In this paper, we explore analogous approaches in the 3D world applied to the problem of non-rigid shape search and retrieval in large databases. |

|||||||||||||||||||

|

Y. Devir, G. Rosman, A. M. Bronstein, M. M. Bronstein, R. Kimmel,

"On reconstruction of non-rigid shapes with intrinsic regularization",

Proc. Workshop on Nonrigid Shape Analysis and Deformable Image Alignment (NORDIA), 2009. Abstract: Shape-from-X is a generic type of inverse problem in computer vision, in which a shape is reconstructed from some measurements. An especially challenging setting of this problem is the case in which the reconstructed shapes are non-rigid. In this paper, we propose a framework for intrinsic regularization of such problems. The assumption is that we have the geometric structure of a shape which is intrinsically (up to bending) similar to the one we would like to reconstruct. For that goal, we formulate a variation with respect to vertex coordinates of a triangulated mesh approximating the continuous shape. The numerical core of the proposed method is based on differentiating the fast marching update step for geodesic distance computation. |

|||||||||||||||||||

|

O. Rubinstein, Y. Honen, A. M. Bronstein, M. M. Bronstein, R. Kimmel,

"3D color video camera",

Proc. Workshop on 3D Digital Imaging and Modeling (3DIM), 2009. Abstract: We introduce a design of a coded light-based 3D color video camera optimized for build up cost as well as accuracy in depth reconstruction and acquisition speed. The components of the system include a monochromatic camera and an off-the-shelf LED projector synchronized by a miniature circuit. The projected patterns are captured and processed at a rate of 200 fps and allow for real-time reconstruction of both depth and color at video rates. The reconstruction and display are performed at around 30 depth profiles and color texture per second using a graphics processing unit (GPU). |

|||||||||||||||||||

|

A. M. Bronstein, M. M. Bronstein,

"Regularized partial matching of rigid shapes",

Proc. European Conf. Computer Vision (ECCV), pp. 143-154, 2008. Abstract: Matching of rigid shapes is an important problem in numerous applications across the boundary of computer vision, pattern recognition and computer graphics communities. A particularly challenging setting of this problem is partial matching, where the two shapes are dissimilar in general, but have significant similar parts. In this paper, we show a rigorous approach that finds matching parts of rigid shapes with controllable size and regularity. The regularity term we use is similar in spirit to the Mumford-Shah functional, extended to non-Euclidean spaces. Numerical experiments show that the regularized partial matching produces better results compared to the non-regularized one. |

|||||||||||||||||||

|

A. M. Bronstein, M. M. Bronstein,

"Not only size matters: regularized partial matching of nonrigid shapes",

Proc. Computer Vision and Pattern Recognition (CVPR), Workshop on Nonrigid Shape Analysis and Deformable Image Registration (NORDIA), 2008. Abstract: Partial matching is probably one of the most challenging problems in nonrigid shape analysis. The problem consists of matching similar parts of shapes that are dissimilar on the whole and can assume different forms by undergoing nonrigid deformations. Conceptually, two shapes can be considered partially matching if they have significant similar parts, with the simplest definition of significance being the size of the parts. Thus, partial matching can be defined as a multicriterion optimization problem trying to simultaneously maximize the similarity and the size of these parts. In this paper, we propose a different definition of significance, taking into account the regularity of parts besides their size. The regularity term proposed here is similar in spirit to the Mumford-Shah functional. Numerical experiments show that the regularized partial matching produces semantically better results compared to the non-regularized one. |

|||||||||||||||||||

|

R. Giryes, A. M. Bronstein, Y. Moshe, M. M. Bronstein,

"Embedded System for 3D Shape Reconstruction",

In Proc. European DSP Education and Research Symposium (EDERS), 2008. Abstract: Many applications that use three-dimensional scanning require a low-cost, accurate and fast solution. This paper presents a fixed-point implementation of a real-time active stereo three-dimensional acquisition system on a Texas Instruments DM6446 EVM board which meets these requirements. A time-multiplexed structured light reconstruction technique is described and a fixed-point algorithm for its implementation is proposed. This technique uses a standard camera and a standard projector. The fixed-point reconstruction algorithm runs on the DSP core while the ARM controls the DSP and is responsible for communication with the camera and projector. The ARM uses the projector to project coded light and the camera to capture a series of images. The captured data is sent to the DSP. The DSP, in turn, performs the 3D reconstruction and returns the results to the ARM for storing. The inter-core communication is performed using the xDM interface and VISA API. Performance evaluation of a fully working prototype proves the feasibility of a fixed-point embedded implementation of a real-time three-dimensional scanner, and the suitability of the DM6446 chip for such a system. |

|||||||||||||||||||

|

G. Rosman, A. M. Bronstein, M. M. Bronstein, R. Kimmel,

"Topologically constrained isometric embedding",

In Human Motion Understanding, Modelling, Capture, and Animation, Computational Imaging and Vision, Vol. 36, Springer, pp. 243-262, 2008. Abstract: We present a new algorithm for nonlinear dimensionality reduction that consistently uses global information, which enables understanding the intrinsic geometry of non-convex manifolds. Compared to methods that consider only local information, our method appears to be more robust to noise. We demonstrate the performance of our algorithm and compare it to state-of-the-art methods on synthetic as well as real data. |

|||||||||||||||||||

|

D. Raviv, A. M. Bronstein, M. M. Bronstein, R. Kimmel, "Symmetries of non-rigid shapes", Proc. Workshop on Non-rigid Registration and Tracking through Learning (NRTL), 2007. Abstract: Symmetry and self-similarity is the cornerstone of Nature, exhibiting itself through the shapes of natural creations and ubiquitous laws of physics. Since many natural objects are symmetric, the absence of symmetry can often be an indication of some anomaly or abnormal behavior. Therefore, detection of asymmetries is important in numerous practical applications, including crystallography, medical imaging, and face recognition, to mention a few. Conversely, the assumption of underlying shape symmetry can facilitate solutions to many problems in shape reconstruction and analysis. Traditionally, symmetries are described as extrinsic geometric properties of the shape. While being adequate for rigid shapes, such a description is inappropriate for non-rigid ones. Extrinsic symmetry can be broken as a result of shape deformations, while its intrinsic symmetry is preserved. In this paper, we pose the problem of finding intrinsic symmetries of non-rigid shapes and propose an efficient method for their computation. |

|||||||||||||||||||

|

A. M. Bronstein, M. M. Bronstein, R. Kimmel, "Rock, Paper, and Scissors: extrinsic vs. intrinsic similarity of non-rigid shapes", Proc. Intl. Conf. Computer Vision (ICCV), 2007. Abstract: This paper explores similarity criteria between non-rigid shapes. Broadly speaking, such criteria are divided into intrinsic and extrinsic, the first referring to the metric structure of the objects and the latter to the geometry of the shapes in the Euclidean space. Both criteria have their advantages and disadvantages; extrinsic similarity is sensitive to non-rigid deformations of the shapes, while intrinsic similarity is sensitive to topological noise. Here, we present an approach unifying both criteria in a single distance. Numerical results demonstrate the robustness of our approach in cases where using only extrinsic or only intrinsic criteria fails. |

|||||||||||||||||||

|

A. M. Bronstein, M. M. Bronstein, A. M. Bruckstein, R. Kimmel, "Paretian similarity for partial comparison of non-rigid objects", Proc. Conf. on Scale Space and Variational Methods in Computer Vision, pp. 264-275, 2007. Abstract: In this paper, we address the problem of partial comparison of non-rigid objects. We introduce a new class of set-valued distances, related to the concept of Pareto optimality in economics. Such distances allow to capture intrinsic geometric similarity between parts of non-rigid objects, obtaining semantically meaningful comparison results. The numerical implementation of our method is computationally efficient and is similar to GMDS, a multidimensional scaling-like continuous optimization problem. |

|||||||||||||||||||

|

A. M. Bronstein, M. M. Bronstein, A. M. Bruckstein, R. Kimmel, "Partial similarity of objects and text sequences", Proc. Information Theory and Applications Workshop, San Diego, 2007. Abstract: Similarity is one of the most important abstract concepts in the human perception of the world. In computer vision, numerous applications deal with comparing objects observed in a scene with some a priori known patterns. Often, it happens that while two objects are not similar, they have large similar parts, that is, they are partially similar. Here, we present a novel approach to quantify this semantic definition of partial similarity using the notion of Pareto optimality. We exemplify our approach on the problems of recognizing non-rigid objects and analyzing text sequences. |

|||||||||||||||||||

|

A. M. Bronstein, M. M. Bronstein, A. M. Bruckstein, R. Kimmel,

"Matching two-dimensional articulated shapes using generalized multidimensional scaling",

Proc. Conf. on Articulated Motion and Deformable Objects (AMDO), pp. 48-57, 2006. Abstract: We present a theoretical and computational framework for matching of two-dimensional articulated shapes. Assuming that articulations can be modeled as near-isometries, we show an axiomatic construction of an articulation-invariant distance between shapes, formulated as a generalized multidimensional scaling (GMDS) problem and solved efficiently. Some numerical results demonstrating the accuracy of our method are presented. Resources: 2D tools dataset |

|||||||||||||||||||

|

A. M. Bronstein, M. M. Bronstein, R. Kimmel,

"Face2Face: an isometric model for facial animation",

Proc. Conf. on Articulated Motion and Deformable Objects (AMDO), pp. 38-47, 2006. Abstract: A geometric framework for finding intrinsic correspondence between animated 3D faces is presented. We model facial expressions as isometries of the facial surface and find the correspondence between two faces as the minimum-distortion mapping. Generalized multidimensional scaling is used for this goal. We apply our approach to texture mapping onto 3D video, expression exaggeration and morphing between faces. Resources: 3D face video |

|||||||||||||||||||

|

A. M. Bronstein, M. M. Bronstein, R. Kimmel,

"Robust expression-invariant face recognition from partially missing data",

Proc. European Conf. on Computer Vision (ECCV), pp. 396-408, 2006. Abstract: Recent studies on three-dimensional face recognition proposed to model facial expressions as isometries of the facial surface. Based on this model, expression-invariant signatures of the face were constructed by means of approximate isometric embedding into flat spaces. Here, we apply a new method for measuring isometry-invariant similarity between faces by embedding one facial surface into another. We demonstrate that our approach has several significant advantages, one of which is the ability to handle partially missing data. Promising face recognition results are obtained in numerical experiments even when the facial surfaces are severely occluded. |

|||||||||||||||||||

|

A. M. Bronstein, M. M. Bronstein, M. Zibulevsky,

"On separation of semitransparent dynamic images from static background",

Proc. Intl. Conf. on Independent Component Analysis and Blind Signal Separation, pp. 934-940, 2006. Abstract: Presented here is the problem of recovering a dynamic image superimposed on a static background. Such a problem is ill-posed and may arise, e.g., in imaging through semireflective media, in separation of an illumination image from a reflectance image, in imaging with diffraction phenomena, etc. In this work we study regularization of this problem in the spirit of Total Variation and general sparsifying transformations. |

|||||||||||||||||||

|

A. M. Bronstein, M. M. Bronstein, R. Kimmel,

"Expression-invariant face recognition via spherical embedding",

Proc. Intl. Conf. on Image Processing (ICIP), Vol. 3, pp. 756-759, 2005. Abstract: Recently, it was proven empirically that facial expressions can be modelled as isometries, that is, geodesic distances on the facial surface were shown to be significantly less sensitive to facial expressions compared to Euclidean ones. Based on this assumption, the 3DFACE face recognition system was built. The system efficiently computes expression invariant signatures based on isometry-invariant representation of the facial surface. One of the crucial steps in the recognition system was embedding of the face geometric structure into a Euclidean (flat) space. Here, we propose to replace the flat embedding by a spherical one to construct isometric invariant representations of the facial image. We refer to these new invariants as spherical canonical images. Compared to its Euclidean counterpart, spherical embedding leads to notably smaller metric distortion. We demonstrate experimentally that representations with lower embedding error lead to better recognition. In order to efficiently compute the invariants we introduce a dissimilarity measure between the spherical canonical images based on the spherical harmonic transform. |

|||||||||||||||||||

|

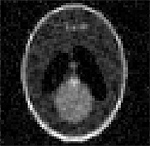

A. M. Bronstein, M. M. Bronstein, M. Zibulevsky, Y. Y. Zeevi,

"Unmixing tissues: sparse component analysis in multi-contrast MRI",

Proc. Intl. Conf. on Image Processing (ICIP), Vol. 2, pp. 1282-1285, 2005. Abstract: We pose the problem of tissue classification in MRI as a blind source separation (BSS) problem and solve it by means of sparse component analysis (SCA). Assuming that most MR images can be sparsely represented, we consider their optimal sparse representation. Sparse components define a physically-meaningful feature space for classification. We demonstrate our approach on simulated and real multi-contrast MRI data. The proposed framework is general in that it is applicable to other modalities of medical imaging as well, whenever the linear mixing model is applicable. |

|||||||||||||||||||

|

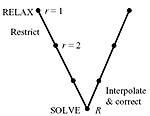

M. M. Bronstein, A. M. Bronstein, R. Kimmel, I. Yavneh,

"A multigrid approach for multi-dimensional scaling",

Proc. Copper Mountain Conf. Multigrid Methods, 2005. Best Paper Award. Abstract: A multigrid approach for the efficient solution of large-scale multidimensional scaling (MDS) problems is presented. The main motivation is a recent application of MDS to isometry-invariant representation of surfaces, in particular, for expression-invariant recognition of human faces. Simulation results show that the proposed approach significantly outperforms conventional MDS algorithms. Resources: Multigrid MDS code (MATLAB) | Tutorial (MATLAB) |

|||||||||||||||||||

|

A. M. Bronstein, M. M. Bronstein, R. Kimmel,

"Isometric embedding of facial surfaces into $S^3$",

Proc. Intl. Conf. on Scale Space and PDE Methods in Computer Vision, pp. 622-631, 2005. Abstract: The problem of isometry-invariant representation and comparison of surfaces is of cardinal importance in pattern recognition applications dealing with deformable objects. Particularly, in three-dimensional face recognition, treating facial expressions as isometries of the facial surface allows robust recognition insensitive to expressions. Isometry-invariant representation of surfaces can be constructed by isometrically embedding them into some convenient space, and carrying out the comparison in that space. Presented here is a discussion on isometric embedding into $S^3$, which appears to be superior to the previously used Euclidean space in the sense of representation accuracy. |

|||||||||||||||||||

|

A. M. Bronstein, M. M. Bronstein, E. Gordon, R. Kimmel,

"Fusion of 2D and 3D data in three-dimensional face recognition",

Proc. Intl. Conf. on Image Processing (ICIP), pp. 87-90, 2004. Abstract: We discuss the synthesis between the 3D and the 2D data in three-dimensional face recognition. We show how to compensate for the illumination and facial expressions using the 3D facial geometry and present the approach of canonical images, which allows to incorporate geometric information into standard face recognition approaches. |

|||||||||||||||||||

|

M. M. Bronstein, A. M. Bronstein, M. Zibulevsky, Y. Y. Zeevi,

"Optimal sparse representations for blind source separation and blind deconvolution: a learning approach", Proc. Intl. Conf. on Image Processing (ICIP), pp. 1815-1818, 2004. Abstract: We present a generic approach, which allows to adapt sparse blind deconvolution and blind source separation algorithms to arbitrary sources. The key idea is to bring the problem to the case in which the underlying sources are sparse by applying a sparsifying transformation on the mixtures. We present simulation results and show that such transformation can be found by training. Properties of the optimal sparsifying transformation are highlighted by an example with aerial images. |

|||||||||||||||||||

|

A. M. Bronstein, M. M. Bronstein, M. Zibulevsky, Y. Y. Zeevi,

"Fast relative Newton algorithm for blind deconvolution of images", Proc. Intl. Conf. on Image Processing (ICIP), pp. 1233-1236, 2004. Abstract: We present an efficient Newton-like algorithm for quasi-maximum likelihood (QML) blind deconvolution of images. This algorithm exploits the sparse structure of the Hessian. An optimal distribution-shaping approach by means of sparsification allows one to use a simple and convenient sparsity prior for processing of a wide range of natural images. Simulation results demonstrate the efficiency of the proposed method. |

|||||||||||||||||||

|

A. M. Bronstein, M. M. Bronstein, M. Zibulevsky,

"Blind source separation using the block-coordinate relative Newton method",

Proc. Intl. Conf. on Independent Component Analysis and Blind Signal Separation, Lecture Notes in Comp. Science No. 3195, Springer, pp. 406-413, 2004. Abstract: Presented here is a generalization of the modified relative Newton method, recently proposed by Zibulevsky for quasi-maximum likelihood blind source separation. Special structure of the Hessian matrix allows to perform block-coordinate Newton descent, which significantly reduces the algorithm computational complexity and boosts its performance. Simulations based on artificial and real data show that the separation quality using the proposed algorithm outperforms other accepted blind source separation methods. |

|||||||||||||||||||

|

|

A. M. Bronstein, M. M. Bronstein, M. Zibulevsky, Y. Y. Zeevi,

"QML blind deconvolution: asymptotic analysis",