
ShapeGoogle: Feature-based shape analysis

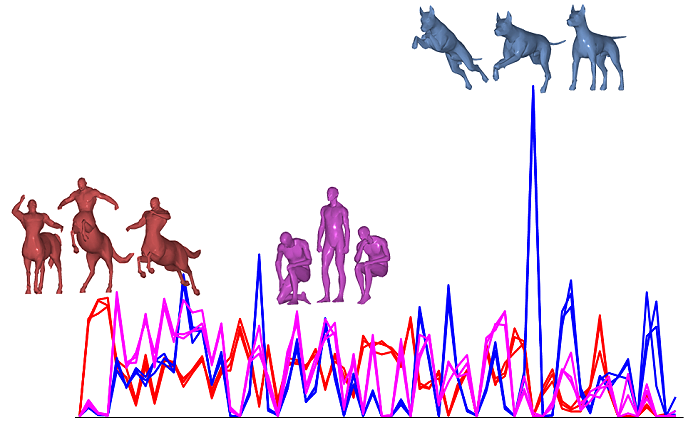

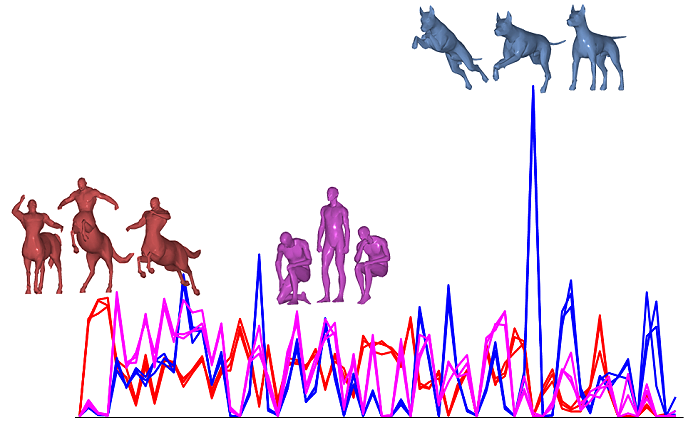

Representation of shapes as bags of features (geometric words) invariant to non-rigid transformations.

The availability of large public-domain databases of 3D models such as Google 3D Warehouse has created a demand for shape search and retrieval algorithms capable of finding similar shapes in the same way a search engine responds to text queries. However, while text search methods are good enough to be used ubiquitously, e.g., in Web applications, the search and retrieval of 3D shapes is a much more challenging problem. Shape retrieval based on text metadata (annotations and tags added by humans) is often insufficient to provide the same experience as a text search engine.

Content-based shape retrieval, which uses the shape itself as a query and compares shape properties, is complicated by the fact that many 3D objects exhibit rich variability, so invariance to different classes of transformations and shape variations is required. One of the most challenging settings, addressed in this work, is that of non-rigid or deformable shapes, for which the class of transformations may be very wide because such shapes can bend and assume different forms.

In the image domain, the analogous problem is image retrieval: finding images that depict similar scenes or objects. Images, like three-dimensional shapes, may exhibit significant variability.

The computer vision and pattern recognition communities have recently witnessed a wide adoption of feature-based methods in object recognition and image retrieval applications. These methods represent images as collections of "visual words" and treat them with text search approaches such as the "bag of features" paradigm.
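The bag-of-features paradigm borrowed from text search can be sketched as follows. This is a minimal illustration, not code from this project: it assumes a vocabulary that is already given (in practice it is typically obtained by clustering training descriptors, e.g. with k-means), hard nearest-word assignment, and tf-idf weighting with cosine similarity as the text-search-style ranking. All function names and the toy data are hypothetical.

```python
import numpy as np

def quantize(descriptors, vocabulary):
    """Hard vector quantization: index of the nearest vocabulary word for each descriptor."""
    # Pairwise squared distances, shape (n_descriptors, n_words)
    d2 = ((descriptors[:, None, :] - vocabulary[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)

def bag_of_words(descriptors, vocabulary):
    """Term-frequency histogram: how often each visual word occurs in one image."""
    words = quantize(descriptors, vocabulary)
    return np.bincount(words, minlength=len(vocabulary)).astype(float)

def idf_weights(histograms):
    """Inverse document frequency log(N / n_w), computed over the database histograms."""
    n_docs = histograms.shape[0]
    doc_freq = (histograms > 0).sum(axis=0)
    return np.log(n_docs / np.maximum(doc_freq, 1))

def rank_by_cosine(query, database):
    """Database indices sorted by decreasing cosine similarity to the query vector."""
    q = query / (np.linalg.norm(query) + 1e-12)
    db = database / (np.linalg.norm(database, axis=1, keepdims=True) + 1e-12)
    return np.argsort(-(db @ q))

# Toy example: a 3-word vocabulary of 2-D descriptors and three "images".
vocabulary = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
images = [
    np.array([[0.1, 0.0], [0.9, 0.1]]),  # words 0 and 1
    np.array([[0.0, 0.9], [0.0, 1.1]]),  # word 2 twice
    np.array([[1.0, 0.1], [0.1, 0.1]]),  # words 1 and 0
]
hists = np.stack([bag_of_words(d, vocabulary) for d in images])
idf = idf_weights(hists)
query = bag_of_words(np.array([[0.05, 0.0], [1.0, 0.0]]), vocabulary)
ranking = rank_by_cosine(query * idf, hists * idf)
```

Here the query contains words 0 and 1, so images 0 and 2 are ranked ahead of image 1. The same histogram-and-rank pipeline applies unchanged once the "words" come from shape descriptors instead of image patches.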

We explore analogous approaches in the 3D world, applied to the problem of non-rigid shape retrieval in large databases.

Using multiscale diffusion heat kernels as "geometric words", we construct shape descriptors by means of the "bag of features" approach. We also show that considering pairs of spatially close "geometric words" ("geometric expressions") makes it possible to create spatially-sensitive bags of features with better discriminative power.
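A minimal sketch of geometric words and expressions, under stated assumptions (this is not the authors' implementation). The heat kernel is expanded in Laplacian eigenpairs, K_t(x, y) = sum_i exp(-lambda_i t) phi_i(x) phi_i(y); the multiscale descriptor of a point x is taken to be the heat kernel signature K_t(x, x) at several times t; descriptors are soft-assigned to a vocabulary with a Gaussian kernel, giving a distribution theta(x) over words; the bag of features sums theta(x) over the shape, and the spatially-sensitive version sums theta(x) theta(y)^T weighted by K_t(x, y), so that word pairs occurring close together count more. A path-graph Laplacian stands in for a mesh Laplacian, vertex weights are uniform, and the vocabulary is a hypothetical subset of descriptors rather than a learned one. All function names are hypothetical.

```python
import numpy as np

def path_graph_laplacian(n):
    """Combinatorial Laplacian L = D - A of a path with n vertices (toy stand-in for a mesh Laplacian)."""
    A = np.zeros((n, n))
    idx = np.arange(n - 1)
    A[idx, idx + 1] = A[idx + 1, idx] = 1.0
    return np.diag(A.sum(axis=1)) - A

def heat_kernel(evals, evecs, t):
    """K_t(x, y) = sum_i exp(-lambda_i t) phi_i(x) phi_i(y); shape (n, n)."""
    return (evecs * np.exp(-evals * t)) @ evecs.T

def heat_kernel_signature(evals, evecs, times):
    """HKS(x, t) = K_t(x, x) at several scales t; shape (n, len(times))."""
    return (evecs ** 2) @ np.exp(-np.outer(evals, times))

def soft_quantize(desc, vocab, sigma):
    """theta(x): Gaussian soft assignment of each descriptor to the vocabulary; rows sum to 1."""
    d2 = ((desc[:, None, :] - vocab[None, :, :]) ** 2).sum(axis=2)
    d2 -= d2.min(axis=1, keepdims=True)  # shift per row to avoid underflow before normalizing
    w = np.exp(-d2 / (2.0 * sigma ** 2))
    return w / w.sum(axis=1, keepdims=True)

def bag_of_features(theta):
    """f = sum_x theta(x): a histogram of geometric words (uniform vertex weights assumed)."""
    return theta.sum(axis=0)

def spatially_sensitive_bof(theta, K):
    """F = sum_{x,y} theta(x) theta(y)^T K_t(x, y): co-occurrence of spatially close word pairs."""
    return theta.T @ K @ theta

# Toy usage on a 20-vertex path graph.
L = path_graph_laplacian(20)
evals, evecs = np.linalg.eigh(L)
times = np.array([0.5, 2.0, 8.0])
hks = heat_kernel_signature(evals, evecs, times)
vocab = hks[::4]  # hypothetical vocabulary of 5 words; k-means would typically be used instead
theta = soft_quantize(hks, vocab, sigma=0.05)
f = bag_of_features(theta)                                   # shape (5,)
F = spatially_sensitive_bof(theta, heat_kernel(evals, evecs, t=2.0))  # shape (5, 5)
```

Because the heat kernel depends only on intrinsic geometry, relabeling the vertices (an isometry of the graph) leaves both f and F unchanged; this is the kind of invariance the bag-of-features construction inherits from its geometric words. F is a word-by-word matrix, so it keeps information about which words appear near each other that the plain histogram f discards.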

Papers

A. M. Bronstein, M. M. Bronstein, M. Ovsjanikov, L. J. Guibas,

"Shape Google: geometric words and expressions for invariant shape retrieval", ACM Trans. Graphics (TOG), to appear.

A. M. Bronstein, M. M. Bronstein, U. Castellani, B. Falcidieno, A. Fusiello, A. Godil, L. J. Guibas, I. Kokkinos, Z. Lian, M. Ovsjanikov, G. Patané, M. Spagnuolo, R. Toldo,

"SHREC 2010: robust large-scale shape retrieval benchmark", Proc. EUROGRAPHICS Workshop on 3D Object Retrieval (3DOR), 2010.

A. M. Bronstein, M. M. Bronstein, B. Bustos, U. Castellani, M. Crisani, B. Falcidieno, L. J. Guibas, I. Kokkinos, V. Murino, M. Ovsjanikov, G. Patané, I. Sipiran, M. Spagnuolo, J. Sun,

"SHREC 2010: robust feature detection and description benchmark", Proc. EUROGRAPHICS Workshop on 3D Object Retrieval (3DOR), 2010.

M. Ovsjanikov, A. M. Bronstein, M. M. Bronstein, L. J. Guibas,

"ShapeGoogle: a computer vision approach for invariant shape retrieval", Proc. Workshop on Nonrigid Shape Analysis and Deformable Image Alignment (NORDIA), 2009.

Data

SHREC 2010 datasets

TOSCA datasets

See also

Non-rigid shape similarity and correspondence

Partial similarity and correspondence
