Coherency Sensitive Hashing (CSH)

[abstract]

[paper]

[presentation]

[source-code]

[data-set]

[performance]

[reconstruction]

Coherency Sensitive Hashing (CSH) extends Locality

Sensitivity Hashing (LSH) and PatchMatch to quickly find

matching patches between two images. LSH relies on hashing,

which maps similar patches to the same bin, in order

to find matching patches. PatchMatch, on the other hand,

relies on the observation that images are coherent, to propagate

good matches to their neighbors, in the image plane.

It uses random patch assignment to seed the initial matching.

CSH relies on hashing to seed the initial patch matching

and on image coherence to propagate good matches.

In addition, hashing lets it propagate information between

patches with similar appearance (i.e., map to the same bin).

This way, information is propagated much faster because it

can use similarity in appearance space or neighborhood in

the image plane. As a result, CSH is at least three to four

times faster than PatchMatch and more accurate, especially

in textured regions, where reconstruction artifacts are most

noticeable to the human eye. We verified CSH on a new,

large scale, data set of 133 image pairs.

Coherency Sensitive Hashing [pdf]

Simon Korman, Shai Avidan

ICCV 2011, Barcelona

[pptx]

Version 1.0 (2.6 MB zip file)

Version 2.0 (2.8 MB zip file)

Version 3.0 (5 MB zip file)

Instructions:

- Unzip the zip file 'CSH_code_v2.zip'

- See the file 'readme.txt' for system requirements, installation procedure and example code

Contents:

The code is writen in Matlab, where the entry point is the simple wrapper function CSH_nn (which has its own help index). It requires only a pair of input images 'A' and 'B'. Different parameters could be set as well (such as patch-width, number of iterations, 'k' for k-nearest-neighbors, whether to calculate in both directions A->B and B->A, and an input mask that prevents the algorithm from using patches in certain areas of the image 'B'), but they will all take default values if not specifically defined. Critical sections of the algorithm are implemented in c++, interfaced by mex functions. These will have to be compiled before usage (a script for that as well as instructions are in the readme file).

Please email me (simon.korman AT gmail.com) regarding any issues you might have found when using the code.

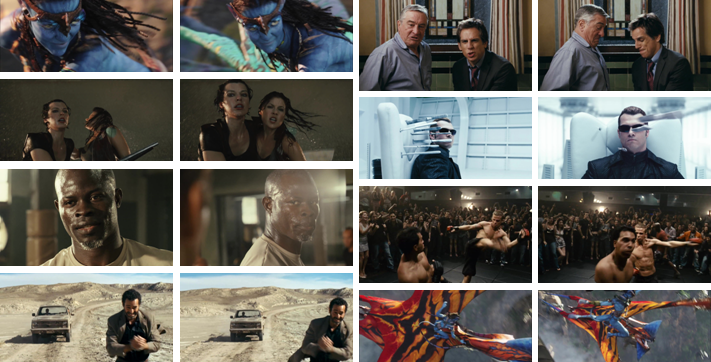

Download (113 MB zip file)

We collected 133 pairs of images, taken from 1080p HD (~2 megapixel) official movie trailers. Each pair consists of images of the same scene with usually some motion of both camera and subjects in the scene (The images are between 1 and ~30 frames apart in the video). We note that pairs of images with only slight camera and subject motion aren't very challenging in the dense patch matching framework and could be handled specifically via registration or optic flow techniques.

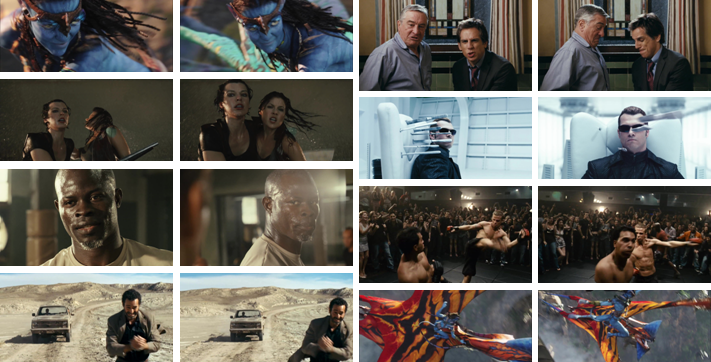

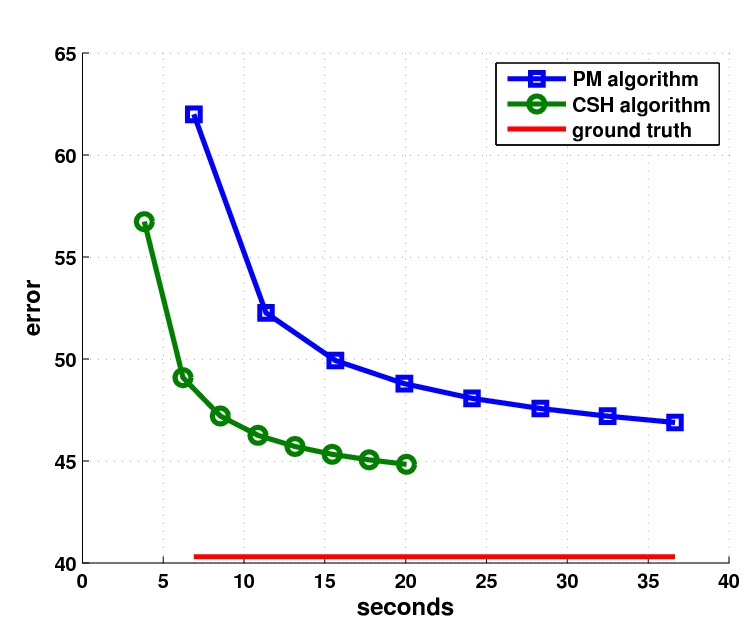

Error/Time tradeoffs of PatchMatch and CSH

Markers on the lines indicate the time it took each algorithm to complete an iteration, and errors are average L 2 distances between matched patches (averaged over all 8x8 patches of the complete VidPairs data-set). Lower error rates (such as those reached by CSH on its third iteration) are reached more than 4 times faster by CSH compared to PatchMatch. Notice that CSH errors are significantly lower and approach the ground truth average error (denoted by solid red line). Similar error/time tradeoffs were observed, when using several sizes of patches and several sizes of images.

This is a comparison of reconstruction capabilities when using different ANNF algorithms as a black-box

(additional examples for section 5.3 of the paper)

Setup

This experiment was about studying how the properties of the different ANNF patch algorithm affect the quality of

image reconstruction, when using these algorithms as a building block. Indeed, such reconstructions are are very

common in patch based applications, especially for image editing (e.g. retargetting, inpainting), image denoising

and super-resolution.

Given a dense patch mapping from image A to image B, we use the reconstruction framework in which every pixel is

reconstructed as a simple average of all corresponding pixels in image B, to which the pixel in A is mapped to by

each of the patches that contained it. This technique has been shown to maximize the (Bi)-Directional Similarity

between the (original and reconstructed) images.

We compare three kinds of mappings under this framework: 1) the actual ground-truth mapping between the images 2)

the mapping produced by CSH (after 5 iterations) 3) the mapping produced by PatchMatch (after 5 iterations).

All images are of a resolution of 0.4 mega-pixels.

Results

The average error comparison, using these 3 mappings, on our 133 image pair data-base was given in the paper (table 2)

and we display it here too:

| |

PatchMatch |

CSH |

ground truth |

| reconstruction RMSE |

7.62 |

6.29 |

5.81 |

In the following, we visually compare the resulting reconstructions. Notice that the PatchMatch reconstruction is

by far less accurate than the "groud-truth" reconstruction, introducing significant blur and color distortions.

The CSH reconstruction isn't much less accurate than the ground-truth reconstruction.

The CSH-based reconstructions are more accurate (compared to PatchMatch), due to the following properties that we overviewed in the paper:

- lower mapping errors

- lower mapping errors the higher the detail /energy/texture-level of the patch

- higher incoherency of the produced mapping

Note: The ground truth reconstruction, is not the original image or the best reconstruction what so ever.

It is the reconstruction which makes use of the ground-truth mapping as a black-box (This is the optimal reconstruction

possible under this framework).

| Image A |

Image B |

|

|

| A reconstructed from B, based on

ground truth /

CSH /

PatchMatch

"A-to-B" dense patch mappings: |

| Image A |

Image B |

|

|

| A reconstructed from B, based on

ground truth /

CSH /

PatchMatch

"A-to-B" dense patch mappings: |

| Image A |

Image B |

|

|

| A reconstructed from B, based on

ground truth /

CSH /

PatchMatch

"A-to-B" dense patch mappings: |

| Image A |

Image B |

|

|

| A reconstructed from B, based on

ground truth /

CSH /

PatchMatch

"A-to-B" dense patch mappings: |

| Image A |

Image B |

|

|

| A reconstructed from B, based on

ground truth /

CSH /

PatchMatch

"A-to-B" dense patch mappings: |

| Image A |

Image B |

|

|

| A reconstructed from B, based on

ground truth /

CSH /

PatchMatch

"A-to-B" dense patch mappings: |

| Image A |

Image B |

|

|

| A reconstructed from B, based on

ground truth /

CSH /

PatchMatch

"A-to-B" dense patch mappings: |

| Image A |

Image B |

|

|

| A reconstructed from B, based on

ground truth /

CSH /

PatchMatch

"A-to-B" dense patch mappings: |

| Image A |

Image B |

|

|

| A reconstructed from B, based on

ground truth /

CSH /

PatchMatch

"A-to-B" dense patch mappings: |

| Image A |

Image B |

|

|

| A reconstructed from B, based on

ground truth /

CSH /

PatchMatch

"A-to-B" dense patch mappings: |